Lessons from e-Learning courses in healthcare:

A scoping review

urn:nbn:de:0009-5-57461

Abstract

Purpose: This paper provides an overview of studies that integrate adult learners’ perceptions of e-Learning courses related to healthcare. It identifies and describes the characteristics and key factors of these courses and delineates factors that should be considered when designing e-Learning courses for healthcare practitioners.

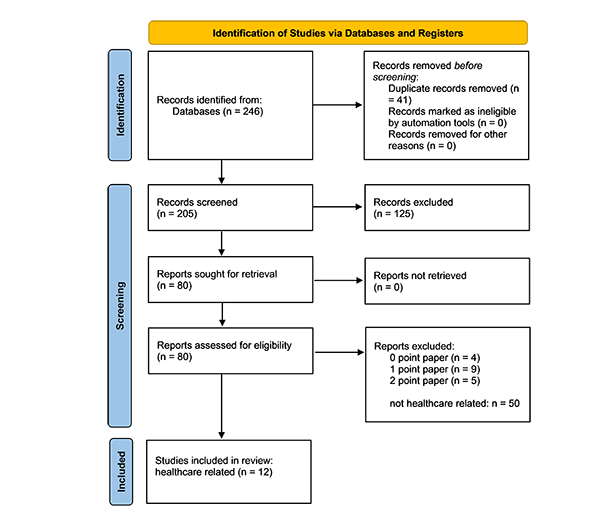

Methods: A scoping review was conducted of studies evaluating university-level e-Learning courses in healthcare disciplines (2010–2020), following the PRISMA-ScR method for data identification, screening, selection and extraction. The data were organised according to study, participant and course characteristics, as well as the evaluation of learners’ success, engagement and perceptions.

Findings: Of the 246 identified studies, 12 met the inclusion criteria for this study. The evaluation of the e-Learning courses in the sample was generally positive for both online and hybrid models across all outcome variables. Three factors influencing the structure, process and outcome quality of e-Learning courses stood out: the functionality of the learning management system (LMS) (i.e., structure quality); the importance of real-time interaction and feedback (i.e., process and outcome quality); and the influence of initial expectations on process and outcome quality.

Originality: A substantial body of research has addressed issues related to outcomes and course design across e-Learning courses. Yet, there is no consensus on what can be defined as a successful, high-quality e-Learning course from an adult learner’s perspective. By integrating the adult learner’s perspective, this research highlights factors to consider when designing e-Learning courses for healthcare practitioners.

Keywords: e-learning; distance learning; adult education; higher education; healthcare; PRISMA-ScR method

Healthcare sciences are unique in higher education, requiring learners to critically reflect on and apply their acquired theoretical and practical knowledge (e.g., assessment, intervention or counselling skills) to clinical cases. These clinical reasoning skills are crucial in order to deliver high-quality, evidence-based healthcare services (Dollaghan, 2007). Traditionally, educational courses in healthcare have employed in-person educational models, yet modern technology has enabled online platforms for providing healthcare education.

Broadly speaking, learning in an online environment (i.e., e-Learning) can be defined as, “using the internet as a communication medium where the instructor and learners are separated by physical distance” (Al-hawari and Al-halabi, 2010, p. 2). In recent years, e-Learning has emerged as an innovative, enriching instructional delivery approach where learning is supported by information and communication technology (ICT) (Ameen et al., 2019; Al-Samarraie et al., 2018). Fast-tracked by the global COVID-19 pandemic, e-Learning in higher education (including healthcare education) has increased exponentially (Downer et al., 2021; Moawad, 2020) and facilitating effective learning in online environments has become a crucial component of contemporary teaching in higher education worldwide (Johnson et al., 2018; Syauqi et al., 2020).

Some of the well-documented advantages of e-Learning for adults include improved flexibility of learning without time or geographical constraints, better compatibility with work and private life, and financial benefits (Al-Samarraie et al., 2018; Johnson et al., 2018; Kuo and Belland, 2016). Systematic reviews support the efficacy of e-Learning courses in higher education (Fontaine et al., 2019; Spanjers et al., 2015; Vaona et al., 2018) and specifically in healthcare education for medical professionals (e.g., Downer et al., 2021; Reeves et al., 2017; Tang et al., 2018). However, the success of higher education e-Learning programmes is variable and is best evaluated using a variety of indicators which consider the role of the adult learner within the learning process (i.e., andragogy) (Muirhead, 2007; Reeves et al., 2017).

The success of e-Learning programmes can be objectively measured using performance outcomes on knowledge and skills tests. While pre-post assessments and exams can provide important formative information, learners’ subjective experiences and satisfaction with e-Learning are also key indicators of the quality of the e-Learning experience and outcomes (Cole et al., 2014; Rodrigues et al., 2019). Another important aspect of success in online learning is student engagement (i.e., the effort and commitment dedicated to their learning) which is documented as a prerequisite for effective learning and should therefore be considered in designing a course (Baker and Pittaway, 2012). For courses related to healthcare, design factors are particularly relevant to support learners’ development of clinical skills and to support a diverse student body that includes a substantial percentage of non-traditional learners (Dos Santos, 2020; Woodley and Lewallen, 2020).

In health education research within higher education, several scoping reviews have examined the evidence on online learning for primary healthcare (Downer et al., 2021; Reeves et al., 2017; Tang et al., 2019). These reviews have focused on evaluating online teaching approaches in primary healthcare (e.g., pre-med, midwifery), and their overall findings indicate that e-Learning can enhance the educational experience for healthcare practitioners. Yet, there is no consensus on what can be defined as a successful, high-quality e-Learning course from an adult learner’s perspective (i.e., the impact of e-Learning on andragogy), on which learning approaches and teaching models are mainly used within e-Learning courses (e.g., different forms of blended learning, synchronous vs. asynchronous instruction), or on whether certain e-Learning concepts are more useful than others. More importantly, clear guidelines are lacking on what should be considered when designing e-Learning courses that are well received by adult learners and that account for the learning approach, the course topic or the target student group. To address this issue, we conducted a scoping review (Munn et al., 2018) to identify and describe learners’ perceptions of e-Learning across e-Learning programmes and the associated course characteristics and design. This work is part of a larger review in which we investigated e-Learning courses across disciplines. However, given the unique demands of healthcare education courses (e.g., teaching applied skills and preparing learners to provide clinical care; Gerhardus et al., 2020), this paper focuses on e-Learning courses in healthcare offered by institutions of higher education.

This scoping review has three main objectives: 1) to provide an overview of studies published from 2010 to 2020 that integrate learners’ perceptions of e-Learning courses in healthcare (focusing on the research methodology, study design and quality, target population, and outcomes related to the learners’ perspectives); 2) to identify and describe characteristics and key factors of e-Learning courses for adult learners with regard to the course topic, the course organisation and content delivery, and teaching and assessment methods; and 3) to delineate factors that influenced learners’ e-Learning experiences and should be considered when designing e-Learning courses in the healthcare professions. Despite the obvious challenge posed by the heterogeneity of the healthcare professions (Gerhardus et al., 2020), the results of this scoping review could contribute towards a best-practice model for designing e-Learning courses for adult learners in healthcare-related professions that highlights the learners’ perspective.

This scoping review is reported in accordance with the PRISMA-ScR statement (Tricco et al., 2018). Considering the rapid technical development, the requirement for high-quality publications, as well as the language skills of the authors, the following limitations were set as a first step: 1) the date of publication (2010–2020); 2) the publication type (peer-reviewed literature only); and 3) the language of the publication (English, German, French, Spanish).

In order to find eligible publications to address our research aims, search terms were prespecified according to SPIDER (Cooke et al., 2012) as a second step:

- Sample: adult learners, university students, undergraduate and graduate students;
- Phenomenon of Interest: e-Learning formats including online learning, e-Learning, web-based learning, massive open online courses (MOOCs), hybrid learning, and blended learning for learners in higher educational and continuing professional development contexts, accredited by an institution of higher learning;
- Design: not restricted (qualitative, quantitative and mixed methods);
- Evaluation: student satisfaction, student success, key principles, best practice, lessons learned, curriculum development; and
- Research type: primary studies/research papers, all evidence levels.

Based on the search terms, a systematic literature search was completed in December 2020 using the EBSCOhost research platform (offering access to 31 databases, including ERIC, MEDLINE, SocINDEX and PsycINFO, via the Ludwig Maximilians University library service). Search terms were truncated where appropriate to ensure that all relevant studies were retrieved, and two Boolean operators (AND and OR) were employed to optimise the search (Aliyu, 2017). Applying the search terms at either title or full-text level produced the following search strategy (search mode: all search terms):

TI (adult* OR “higher education*” OR “university student*” OR *graduate* OR “continuing professional*”) AND TI (e-Learning OR “online learning” OR “online education*” OR web-based OR “hybrid learning” OR “blended learning” OR MOOCS) AND TX (“student satisfaction” OR “student success” OR curriculum* OR “key principle*” OR “best practice*” OR “lesson* learned”).
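As an illustration only, the Boolean logic of this string (terms combined with OR inside each group, groups combined with AND, and the EBSCOhost field codes TI for title and TX for full text) can be assembled programmatically. The Python sketch below is not a tool used in this review; the helper names `or_group` and `build_query` are hypothetical, and any generated string should be checked against the target platform's search syntax.

```python
def or_group(terms):
    """Join alternative search terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(field_groups):
    """AND together (field code, terms) pairs, e.g. ("TI", [...]).

    Field codes follow the search string above: TI = title, TX = full text.
    """
    return " AND ".join(f"{field} {or_group(terms)}" for field, terms in field_groups)


# Term groups copied from the search strategy above (straight quotes used).
sample = ["adult*", '"higher education*"', '"university student*"',
          "*graduate*", '"continuing professional*"']
phenomenon = ["e-Learning", '"online learning"', '"online education*"', "web-based",
              '"hybrid learning"', '"blended learning"', "MOOCS"]
evaluation = ['"student satisfaction"', '"student success"', "curriculum*",
              '"key principle*"', '"best practice*"', '"lesson* learned"']

query = build_query([("TI", sample), ("TI", phenomenon), ("TX", evaluation)])
print(query)
```

Keeping each SPIDER component as a separate list makes it straightforward to add or drop synonyms without re-typing the operators.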

To eliminate duplicates and to select and rate relevant literature, search results were imported into the free web application Rayyan QCRI (Ouzzani et al., 2016), a Library Systematic Review Service. The search results were gradually reduced to the final data sample (see Figure 1) through initial screening on title and abstract level followed by full-text eligibility checks, in both instances using an eligibility criteria checklist.

Six reviewers took part in this process, namely the three authors and three additional research assistants. To increase reliability, the search results were randomly assigned to three pairs of reviewers, who rated the studies blindly. For the screening phase, interrater agreement was 60%; disagreements were discussed until 100% consensus was reached. For the eligibility phase, the reviewers were reassigned to new pairs and used a 5-point rating system based on five questions related to our research aims (see Table 1). Rating differences of more than two points were treated as disagreements; these occurred in 20% of the total data set and were discussed, supported by the judgement of a third independent reviewer, until 100% consensus was reached. Papers that both reviewers rated 0–2 points were excluded, and papers rated 3–5 points were included. The eligibility of papers rated 2 points by one reviewer and 3 points by the other (n = 5) was discussed and decided by the entire research team, which led to the exclusion of three papers.
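The pairwise decision rules described above can be expressed as a small function. This Python sketch is a hypothetical illustration, not the procedure or software used by the review team: `percent_agreement` assumes that agreement was computed as simple percentage agreement (the statistic is not named above), and `eligibility_decision` assumes that any pair of ratings straddling the 2/3 cut-off without differing by more than two points (not only the reported 2 vs. 3 case) would go to team discussion.

```python
def percent_agreement(decisions_a, decisions_b):
    """Percentage of papers on which two reviewers reached the same decision."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("need two non-empty decision lists of equal length")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return 100 * matches / len(decisions_a)


def eligibility_decision(score_a, score_b):
    """Map two reviewers' 0-5 eligibility ratings to the next step."""
    for score in (score_a, score_b):
        if not 0 <= score <= 5:
            raise ValueError("ratings must lie between 0 and 5")
    if abs(score_a - score_b) > 2:
        return "resolve_with_third_reviewer"  # counted as a disagreement
    if score_a <= 2 and score_b <= 2:
        return "exclude"
    if score_a >= 3 and score_b >= 3:
        return "include"
    return "team_discussion"  # ratings straddle the 2/3 cut-off, e.g. 2 vs. 3


print(eligibility_decision(2, 3))  # team_discussion
```

Checking the disagreement rule first ensures that widely divergent ratings (e.g. 1 vs. 4) are escalated to the third reviewer rather than being settled by the inclusion threshold.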

| No. | Criteria | Yes | No |
|---|---|---|---|
| 1. | Did the study describe the participants (e.g., number, age, educational background)? | | |
| 2. | Was the study run by a university or institution of higher learning and/or did it receive ethical approval? | | |
| 3. | Did the authors describe the learning approach for the online/hybrid programme (e.g., information about the format or structure of the course or class, teaching tools)? | | |
| 4. | Were the outcomes of the study related to one or more of the following outcomes of interest: learner success, learner engagement, learner perception? | | |
| 5. | Did the authors describe how the learning programme/course/class was evaluated (e.g., focus groups, questionnaire, multiple choice questions, etc.)? | | |
| | Total (overall rating = number of “Yes” answers) | | |

Table 1. Overall study rating system on a 5-point scale for the eligibility phase
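Scoring the checklist in Table 1 amounts to counting affirmative answers. A minimal Python sketch, assuming each paper's answers are stored as five booleans in the order of the table; the names `CRITERIA` and `overall_rating` are hypothetical:

```python
# Short labels for the five Table 1 criteria, in table order.
CRITERIA = (
    "participants described",
    "run by a higher-education institution and/or ethically approved",
    "learning approach described",
    "outcomes concern learner success, engagement or perception",
    "evaluation method described",
)


def overall_rating(answers):
    """Overall rating (0-5) = number of 'Yes' answers to the Table 1 criteria."""
    if len(answers) != len(CRITERIA):
        raise ValueError(f"expected {len(CRITERIA)} answers, got {len(answers)}")
    return sum(1 for answer in answers if answer)


print(overall_rating([True, True, False, True, True]))  # 4
```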

To derive information from high-quality studies, the eligible papers were critically appraised. Because the studies were expected to be heterogeneous, the Mixed Methods Appraisal Tool (MMAT; Version 2018, Hong et al., 2018) was used. An overall quality score was awarded: a score of 5 indicated that 100% of the quality criteria were met; 4 = 80%; 3 = 60%; 2 = 40%; 1 = 20%. Only manuscripts with a score of 3 or higher were included in the final sample. The MMAT appraisal was conducted by independent research assistants who were not otherwise involved in the review. Reliability was checked by the second author for 20% of the final sample, with 92% agreement on the MMAT ratings.
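The MMAT scoring and inclusion threshold described above map onto two short functions. In this hypothetical Python sketch (the function names are ours), each point corresponds to 20% of the quality criteria, and studies scoring 3 or more are retained:

```python
def mmat_percentage(score):
    """Share of MMAT quality criteria met (in %) for an overall score of 1-5."""
    if score not in (1, 2, 3, 4, 5):
        raise ValueError("MMAT score must be an integer from 1 to 5")
    return score * 20


def retained_after_appraisal(score, minimum=3):
    """Manuscripts scoring at least 3 (i.e. >= 60% of criteria) were kept."""
    return mmat_percentage(score) >= mmat_percentage(minimum)


print(mmat_percentage(4), retained_after_appraisal(2))  # 80 False
```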

Data were extracted using a custom-designed data extraction protocol covering four sections: 1) study characteristics (four items); 2) participant characteristics (four items); 3) course characteristics (20 items); and 4) evaluation of learners’ success, engagement and/or perceptions (12 items). Hence, 40 items in total were completed for each paper (for the full item list, including final coding rules, see Appendix 1). To ensure coding reliability, the data set was randomly assigned to the three authors. After coding one section, the authors discussed and resolved any uncertainties, refined the coding rules where necessary, and continued to the next section until all data were extracted. Each paper was read by at least two authors. Interrater agreement for the final coding exceeded 80%, and the remaining disagreements were resolved by consensus (100% consensus). Finally, the first author checked all coding sheets of the final data set. All individually extracted data were transferred into Microsoft Excel and synthesised at a descriptive level (i.e., frequencies of reported categories, and inductive and deductive category formation in relation to the assessed quality dimension, course modality and learning approach). Items that were reported in less than 30% of the papers and/or reported inconsistently were excluded from further analysis, as objective coding of these items was not considered feasible.
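The item-level exclusion rule can be sketched as a frequency filter. The Python example below is hypothetical: it assumes that, for each extraction item, the number of papers reporting it is known, and it ignores the second criterion (inconsistent reporting), which required human judgement. The section totals reproduce the 40-item count stated above; the example item names and counts are invented for illustration.

```python
# Items per section of the extraction protocol, as listed above.
SECTION_ITEMS = {"study": 4, "participants": 4, "course": 20, "evaluation": 12}
TOTAL_ITEMS = sum(SECTION_ITEMS.values())  # 40 items per paper


def retained_items(papers_reporting, n_papers, min_share=0.30):
    """Keep items reported in at least `min_share` of the papers.

    papers_reporting: dict mapping item name -> number of papers reporting it.
    Items below the threshold (here: less than 30%) are dropped.
    """
    return sorted(
        item for item, count in papers_reporting.items()
        if count / n_papers >= min_share
    )


# Invented counts for three hypothetical items across 12 papers.
print(retained_items({"course_length": 10, "lms": 7, "tuition_fees": 2}, 12))
# ['course_length', 'lms']
```

With 12 papers, the 30% threshold means an item must be reported in at least four papers to be analysed further.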

The database search initially yielded 246 records. After duplicates were removed, n = 205 papers remained to be screened at title and abstract level, of which n = 125 were excluded. Next, n = 80 papers were assessed for eligibility at full-text level, and a further n = 18 studies were excluded. Of the remaining eligible papers (n = 62), n = 12 were identified as healthcare-related and therefore included in this review; these formed the final subsample from which data were extracted. Figure 1 shows the selection process and reasons for exclusion in a flow diagram according to the PRISMA 2020 statement (Page et al., 2021).
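Each stage count in the flow diagram follows from the previous one by subtraction. The short Python check below (a hypothetical helper, not part of any PRISMA tooling) reproduces the numbers reported above, which makes the arithmetic behind Figure 1 explicit:

```python
def prisma_flow(identified, after_dedup, excluded_screening, excluded_fulltext, included):
    """Derive the stage counts of a PRISMA flow diagram by subtraction."""
    assessed = after_dedup - excluded_screening
    eligible = assessed - excluded_fulltext
    if not 0 <= included <= eligible:
        raise ValueError("included studies must be a subset of eligible ones")
    return {
        "duplicates removed": identified - after_dedup,
        "screened": after_dedup,
        "assessed for eligibility": assessed,
        "eligible (all disciplines)": eligible,
        "included (healthcare)": included,
    }


flow = prisma_flow(identified=246, after_dedup=205, excluded_screening=125,
                   excluded_fulltext=18, included=12)
for stage, n in flow.items():
    print(f"{stage}: {n}")
```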

Table 2 gives an overview of the final sample (N = 12) of studies evaluating e-Learning courses within healthcare, together with their quality appraisal (MMAT) scores.

Study and participants’ characteristics: As Table 2 shows, the sample represents studies from eight countries on six continents and covers a range of research approaches, including seven quantitative, two qualitative and three mixed-methods designs. All three mixed-methods studies (#10, 11, 12) met 100% of the quality criteria assessed by the MMAT, as did two quantitative studies (#3, 5) and one qualitative study (#9).

The number of learners included in the studies ranged from n = 6 (#2) to n = 220 (#8), covering an age range of 19–60 years and various educational backgrounds (both undergraduate and graduate learners). As for e-Learning experience, study #5 required some prior experience with MOOCs for participation. Aside from this study, only study #8 explicitly mentioned that its undergraduate participants had completed an introductory six-month computer literacy course. Unfortunately, a third of the studies (n = 4) did not report on the participants’ prior e-Learning knowledge or experience.

Definitions of the different outcome variables: The concept of learners’ perceptions, success and engagement varied across studies thus necessitating a closer look at the operational definitions employed by the authors.

Learners’ success. This outcome variable was mainly defined as knowledge improvement or students’ achievement, measured via oral or written examination results (#1, 4, 12).

Learners’ engagement. Similar to learners’ success, this outcome variable was clearly operationalised via objective, quantitative statistics such as usage and completion of learning modules, materials and activities as well as participation in discussions or discussion boards measured via log-based assessment (#2), participation rate (#11) as well as reviews of discussion boards (#8).
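Log-based engagement measures such as module completion can be computed from LMS activity logs. The Python sketch below assumes a hypothetical log format of (learner, module, event) tuples and defines the completion rate as the share of learners appearing in the logs who completed a given module; actual LMS exports and the included studies' own definitions may differ.

```python
from collections import defaultdict


def completion_rates(log_events, module_ids):
    """Return, per module, the share of logged learners who completed it.

    log_events: iterable of (learner_id, module_id, event) tuples; the
    event label "completed" marks a finished module (hypothetical schema).
    """
    learners = set()
    completed = defaultdict(set)  # module_id -> learners who completed it
    for learner, module, event in log_events:
        learners.add(learner)
        if event == "completed":
            completed[module].add(learner)
    if not learners:
        return {module: 0.0 for module in module_ids}
    return {module: len(completed[module]) / len(learners) for module in module_ids}


events = [
    ("a", "m1", "opened"), ("a", "m1", "completed"),
    ("b", "m1", "completed"), ("b", "m2", "opened"),
    ("c", "m1", "opened"),
]
rates = completion_rates(events, ["m1", "m2"])
print(rates)
```

Using sets means that repeated "completed" events from the same learner are counted only once.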

Figure 1. PRISMA 2020 flow diagram for new systematic reviews which included searches of databases and registers only

From: Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ, 372(71). doi:10.1136/bmj.n71

| No. | Author(s) | Study design (MMAT score) | Country | Participants (n) | Age in years (M/SD, range) | Educational background | Prior knowledge in e-Learning? | Outcome variables |
|---|---|---|---|---|---|---|---|---|
| Quantitative research (non-randomised) | | | | | | | | |
| 1 | Hemans-Henry | non-randomised controlled trial (3/5) | USA | 142 | / | graduate | / | success, perceptions |
| Quantitative research (descriptive) | | | | | | | | |
| 2 | Ayoob et al. | survey (3/5) | USA | 6 | / | post-graduate | / | perceptions, engagement |
| 3 | Buthelezi & van Wyk | survey (5/5) | South Africa | 60 | 21–60 | post-graduate | mixed | perceptions |
| 4 | Camargo et al. | survey (3/5) | Brazil | 76 | 19–44 | under- and graduate | / | success |
| 5 | Dai et al. | survey (5/5) | China | 160 | 19.07 | undergraduate | yes | perceptions |
| 6 | Gray et al. | survey (4/5) | USA | 98 | / | graduate | / | perceptions |
| 7 | McGrath & Thompson | survey (4/5) | Australia | 42 | 21–50 | post-graduate | mixed | perceptions |
| Qualitative research | | | | | | | | |
| 8 | Bharuthram & Kies | qualitative description (3/5) | South Africa | 220 | / | undergraduate | yes | perceptions, engagement |
| 9 | Rogo & Portillo | case study (5/5) | USA | 17 | / | under- and graduate | mixed | perceptions |
| Mixed methods studies | | | | | | | | |
| 10 | Dias & Diniz | sequential explanatory (5/5) | Portugal | 36 | 22.05 | undergraduate | mixed | perceptions |
| 11 | Schedlitzki et al. | sequential explanatory (5/5) | England | 20 | 28–45 | post-graduate | mixed | perceptions, engagement |
| 12 | Uzzaman et al. | convergent design (5/5) | Bangladesh | 49 | 26–50 | graduate | mixed | perceptions, success |

Note: MMAT score 5 = 100%; 4 = 80%; 3 = 60%; 2 = 40%; 1 = 20% of quality criteria met. / = not reported. Outcome variables: learners’ perceptions (satisfaction, experience, confidence, feelings), success or engagement.

Table 2. Quality appraisal and characteristics of the studies included in this review
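The descriptive frequencies reported for Table 2 can be re-derived from the table itself. The following Python sketch transcribes study number, design group, MMAT score and country from Table 2 (the data structure is our own) and tallies the design counts, the studies meeting 100% of the MMAT criteria, and the number of countries:

```python
from collections import Counter

# (study no., design group, MMAT score out of 5, country), transcribed from Table 2
STUDIES = [
    (1, "quantitative", 3, "USA"),
    (2, "quantitative", 3, "USA"),
    (3, "quantitative", 5, "South Africa"),
    (4, "quantitative", 3, "Brazil"),
    (5, "quantitative", 5, "China"),
    (6, "quantitative", 4, "USA"),
    (7, "quantitative", 4, "Australia"),
    (8, "qualitative", 3, "South Africa"),
    (9, "qualitative", 5, "USA"),
    (10, "mixed", 5, "Portugal"),
    (11, "mixed", 5, "England"),
    (12, "mixed", 5, "Bangladesh"),
]

designs = Counter(design for _, design, _, _ in STUDIES)
full_score = sorted(no for no, _, score, _ in STUDIES if score == 5)
countries = {row[3] for row in STUDIES}

print(dict(designs))   # {'quantitative': 7, 'qualitative': 2, 'mixed': 3}
print(full_score)      # [3, 5, 9, 10, 11, 12]
print(len(countries))  # 8
```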

Learners’ perceptions. This construct comprised learners’ subjective feedback on how they experienced the e-Learning course, their perceived satisfaction, and the strengths and challenges they perceived, resulting in a judgement of the quality of the e-Learning course. This feedback was collected using surveys (with closed and open-ended questions, #8; free-text comments, Likert scales and rated responses to closed statements, #3, 7), individual (semi-structured) interviews (#9, 10, 11) or focus group interviews (#12). The learners’ feedback in our sample addressed three dimensions of quality as conceptualised by Donabedian (1966):

- Structural quality: the use and ease of the course (specifically the Learning Management System/LMS; #2, 3, 10, 11) as well as the available communication tools and collaborative and interactive tools (#2, 3, 10), and their confidence with e-Learning (#8).
- Process quality: experiences that promote building and sustaining online learning communities (#9), sense of community (#7), intention to continue with studies (#5), experiences of peer and instructor feedback (#7), experiences and satisfaction with different delivery and teaching methods as well as instructional strategies (#2, 5, 6, 8, 10, 11, 12).
- Outcome quality: perceived professional identity formation and confidence in theoretical and practical experience (#7) and satisfaction with their learning outcome (#5).

All courses described in the included studies were run by an institution of higher learning and were broadly classified by implementation modality: purely online (n = 5) and hybrid models (a combination of online plus some face-to-face sessions, n = 7). Appendix 2 details the course characteristics in relation to the study programme (professional discipline and course topic), organisation of the course, teaching and delivery methods, and assessment forms, as summarised below.

Study Programme (Professional discipline): The dominant professional area in our review is the medical field (e.g., nursing, radiology), with seven studies in total (four purely online: #1, 2, 3, 4; three hybrid: #6, 9, 12). However, a considerable proportion of studies address other healthcare professions in which clinical reasoning and case management are key competencies, such as psychology (one purely online study, #5), the therapeutic professions (two hybrid: #7, 10) and other healthcare-related professions (two hybrid: #8, 11).
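The distribution of professional disciplines by course modality described above can be cross-tabulated as follows. This Python sketch encodes our reading of the paragraph above and of the modality counts given earlier (purely online: #1–5; hybrid: #6–12); the category labels are our own shorthand:

```python
from collections import Counter

# study no. -> (professional discipline, course modality), as described above
COURSES = {
    1: ("medical", "online"), 2: ("medical", "online"),
    3: ("medical", "online"), 4: ("medical", "online"),
    5: ("psychology", "online"),
    6: ("medical", "hybrid"), 7: ("therapeutic", "hybrid"),
    8: ("other healthcare", "hybrid"), 9: ("medical", "hybrid"),
    10: ("therapeutic", "hybrid"), 11: ("other healthcare", "hybrid"),
    12: ("medical", "hybrid"),
}

crosstab = Counter(COURSES.values())
modality_totals = Counter(modality for _, modality in COURSES.values())

for (discipline, modality), n in sorted(crosstab.items()):
    print(f"{discipline:<16} {modality:<7} {n}")
```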

Organisation: As for the degree of obligation, elective courses (n = 4) were, with one exception (#12), offered as purely online, asynchronously conducted courses (#1, 2, 5), one of which was a MOOC (#5). The hybrid models were largely mandatory, curriculum-based courses, with one exception: study #12 evaluated an optional, stand-alone hybrid course for general practitioners. Course length varied considerably, particularly among the purely online courses: from a minimum of 40–45 minutes with four weeks for completion (#1, 4), through one to three hours per week over nine weeks (#5), to a maximum of a full academic year (#2). The hybrid courses were mainly planned over one academic term (#6, 9, 12; frequency and total workload not further specified). No single learning management system predominated, either for the purely online courses or for the hybrid models.

Teaching and delivery methods: In our sample three distinct methods were identified: problem-based learning (online: #1, 4; hybrid: #6, 9), an interactive learning approach (online: #2; hybrid: #8, 11), and ‘content loaded’ technology (#5). Information on the teaching method was not reported in four papers. There was no evidence of a correlation between the learning approach and the profession or course topic. Yet, it seems noteworthy that study #5 is the only study that meets the purely online, asynchronously delivered course description, and is also the only one that did not incorporate a problem- or case-based approach.

Regarding the delivery methods, all but one of the purely online delivered courses were designed as asynchronous, self-paced learning modules, with no interaction with peers and/or instructors (#1, 2, 4, 5). Study #3 was also conducted in a solely asynchronous mode, but still ensured interaction with both peers and instructors via discussion boards. Another study used synchronous face-to-face sessions during which learners interacted online as they learned to use e-Learning discussion boards (#8). The remaining six courses combined asynchronous and synchronous sessions where interaction with peers and instructors was guaranteed and required. However, how asynchronous and synchronous sessions were conducted varied greatly, particularly across the hybrid models.

Study #9, for instance, evaluated a predominantly asynchronous online study programme that started with a blended learning orientation week on campus, where the learners got to know each other in person and were familiarised with the use of, and interaction within, the learning management system, followed by a second campus visit in the middle of the programme. Similarly, in study #12 a one-day face-to-face seminar was organised as a kick-off event, followed by asynchronous online lessons with learning objectives posted weekly by the lecturer, and the course ended with another face-to-face seminar held over two days. As a kick-off event, study #11 described a two-day online discussion activity held before the asynchronous activities in order to encourage communication between learners within their blended learning course. A more conventional blended learning approach was seen in study #6, which evaluated a flipped classroom design with alternating asynchronous online pre-classroom activities and face-to-face classroom activities. In summary, it is noteworthy that the majority of the courses in our sample (n = 8) ensured some form of interaction with both peers and instructors. Despite the variation in the ways of interaction, there was a slight predominance of written communication via online discussion boards (#3, 8, 9, 11, 12).

As for the materials, formats and media used within the courses, the six studies that reported on this once again used different combinations of methods. Yet, in line with what has been highlighted above, different types of forum discussions, (structured) discussion boards, discussion questions, Twitter chats or informal postings appear to have been among the most favoured didactic methods, particularly within the hybrid programmes (#6, 8, 9, 11, 12, and also in one purely online programme: #3). Furthermore, clinical videos, case studies or case-based exercises played an important role and were implemented in half of the courses in both the purely online and hybrid models (n = 6; purely online: #1, 4, 5; hybrid: #6, 7, 11). In addition to these methods, the following were also found more than once: quizzes and self-assessments (purely online: #2, 5; hybrid: #11, 12); expert lectures and interviews (purely online: #4, 5; hybrid: #11); online reading and reading assignments (hybrid: #9, 12); and presentation of information (e.g., via video slides with an explanatory audio script; purely online: #1; hybrid: #6). Formats such as peer review activities (hybrid: #9), group projects and presentations (hybrid: #9) and electronic flashcards (purely online: #2) were each mentioned in only one study.

Forms of Assessment: As for the assessments required to complete a course, there is a clear tendency towards multiple-choice questions (MCQs) and quizzes in the purely online, asynchronously delivered courses (#1, 2, 4, 5), while participation and performance in (classroom) discussions or oral examinations were the preferred forms of evaluation in most of the hybrid models (#6, 8, 9, 12). Writing a critical reflective summary (#11) and project-oriented (group) work (#9) were also reported within the hybrid courses. Notably, even in asynchronous, purely online courses, the authors mentioned assessing higher-order thinking skills such as clinical reasoning and the application of knowledge to clinical cases, using (case-based) MCQs and open-ended tests (e.g., #1, 4). Overall, all levels of Bloom’s (1956) taxonomy were represented in the assessments reported in our sample.

The results are presented according to three outcome variables: learners’ success, engagement and perceptions using the dimensions of structure quality, process quality and outcome quality defined earlier.

Learners’ success: Based on the three studies in our sample that reported this outcome (#1, 4, 12), both purely online and hybrid learning seem promising for improving learners’ knowledge. Studies #1 and #4 both reported a significant increase in knowledge following a purely online self-study course of approximately 40–45 min without personal interaction, assessed via partially case-based MCQs and open questions. Study #1 also highlighted that the online learners scored even higher than resident physicians with one more year of practical experience who had not taken the online course. Study #4 showed that, when using a self-study learning DVD, graduate learners performed better than undergraduate learners. The courses in these two studies used either a problem-based learning approach (#4) or case-based exercises (#1). Similarly positive results were reported for a 40-hour hybrid course (#12) that included personal interaction with peers and instructors: the hybrid group achieved final grades similar to those of a face-to-face group.

Learners’ engagement: Study #2 reported high student engagement (defined by completion rate) with its self-paced, interactive learning modules, despite the absence of interaction with either peers or instructors. Notably, engagement was particularly high at the beginning and end of the study period. Study #11 also reported higher engagement with an interactive module than with an information-sharing site within the learners’ LMS, both used in the asynchronous part of the blended-learning course. Study #8 took a more nuanced perspective on engagement by examining potential influencing factors: learners from advantaged backgrounds and with more technology experience showed greater engagement in the online activities.

Learners’ perceptions:

- Structure quality: Regarding the time frame of the course, learner feedback was positive across different formats, ranging from a purely online self-study course of 45 min over 28 days to a more intensive 40-hour course over 24 days conducted in a hybrid model. Learners described both courses as appropriate in length and amount of information and as compatible with studying and working (#1, 11). As for the use and ease of the course (the LMS environment in particular), study #3 found a significant association between age and familiarity: older learners experienced greater difficulties in accessing and using the LMS in their purely online course. Apart from that, the LMS used in two purely online courses (Moodle) was generally rated positively (#1, 3). Feedback on the hybrid courses was more differentiated (#8, 10, 12), pointing out strengths and weaknesses of the LMS chosen for the online part of the course. On the one hand, the weaknesses, mentioned only by undergraduate learners, related to a lack of ICT knowledge among both learners and instructors regarding the tools available on the LMS (#10) and to dissatisfaction with some technical demands, which led to low confidence in e-Learning (#8). On the other hand, study #10 reported that the functionality of the Moodle LMS as a content repository was perceived as a strength, as were the interactive tools incorporated into the LMS and the communication tools available for instructor-student interaction. Similarly, study #11 reported that learners were satisfied with both an interactive Blackboard site and an information-sharing Blackboard site used mainly as a content repository; the learners did not prefer one over the other. In study #10, three factors significantly influenced structure quality when e-Learning elements were incorporated: the degree of LMS interactivity, the instructors’ ICT knowledge, and the learners’ training.

- Process quality: Regarding general experiences of and satisfaction with different delivery and teaching methods and instructional strategies, most feedback came from hybrid courses (#6, 7, 10, 11, 12). Despite a lack of motivation for the online part and the perception that online reading was uncomfortable (#12), learners reported positive feelings and a sense of convenience towards the hybrid learning approach (#11, 12). Compared with other settings (face-to-face or purely online), learners preferred hybrid approaches (e.g., the flipped classroom), despite the greater preparation time they required (#6, 12). In particular, participants’ engagement in (case) discussions in class (#6) with the option of real-time feedback (#12) was perceived as a strength, provided that instructors had sufficient facilitation skills to guide the case discussions (#6). Learners regarded direct face-to-face feedback as important (#7), and the lack of such real-time feedback during online discussions was seen as a disadvantage of the hybrid courses (e.g., #12, where online discussions were conducted in private Facebook groups). Similarly, different forms of interaction were viewed as cornerstones for building and sustaining online learning communities (#9). As for programme design, the inclusion of a week-long ‘blended’ on-campus visit and a second seminar visit in the middle of the programme, both of which allow real-time interaction and feedback, was considered essential. Regular faculty interaction with learners and learner interaction characterised by sensitive and respectful communication were two further important features. As for the course design of the online part, weekly discussions, collaborative activities (actively engaging students in critical thinking), communication in small groups and opportunities for informal conversation were key to an e-Learning course that was well received by undergraduate and graduate learners alike (#9).
In study #7, learners in a blended learning approach developed a sense of community similar to that of learners in traditional on-campus settings.

Nonetheless, courses without any form of interaction were also perceived positively: 80% of the students preferred the 45-min purely online e-Learning module to in-person or blended learning approaches (#1). Looking more closely at purely online courses, study #5 analysed potential determinants of the intention to continue a MOOC module. The researchers found that the dissonance between initial expectations and the actual user experience influenced the level of satisfaction, which in turn shaped the participants’ attitudes; these attitudes, together with the participants’ curiosity to learn, determined their continuance intention.

- Outcome quality: Learners’ perceptions of the quality of the course outcome were generally positive, both for asynchronous, purely online courses (#1, 5, 7) and for hybrid models (#2, 6). Learners reported high satisfaction (#2) and rated the courses they attended as a useful resource relevant for clinical use (purely online: #1, 2; hybrid: #6). Learners in a blended learning setting developed confidence in applying theory to practice similar to that of learners in traditional on-campus settings (#7). For settings with synchronous classroom activities in particular, learners reported that their understanding improved, and the synchronous face-to-face case discussions especially were perceived as enhancing their learning experience in the flipped classroom environment (#6).

The included studies represented a heterogeneous body of learners, with more studies including graduate-level participants. Elective courses were found almost exclusively among purely online, asynchronous courses, whereas hybrid models appear to have been predominantly mandatory, curriculum-based courses. This may suggest that purely online courses are not (yet) implemented as an integral part of a curriculum in the same way as hybrid models, but are rather valued as an ‘add-on’ that allows even more flexibility in length, content and learning outcomes. Problem-based and interactive learning approaches predominated across implementation modalities. Regarding learning evaluation, elements of Bloom’s (1956) taxonomy were identified in the assessments used in our sample (e.g., remember, understand, apply, analyse, evaluate, create). There was a clear tendency towards MCQs and quizzes in the purely online, asynchronous courses, and towards evaluation of participation and performance in (classroom) discussions or oral examinations in most of the hybrid models. All of these are recognised and reliable methods for assessing the clinical reasoning skills needed by health professionals.

The majority of the studies focused on evaluating learners’ perceptions, and little data could be extracted for the other outcome variables. Based on the available data, both purely online and hybrid learning seem promising for improving learners’ knowledge when they incorporate practical or case-based exercises. Students also seemed to be sufficiently engaged by interactive learning modules, with advantaged backgrounds and greater technology experience appearing to be positive influencing factors. Overall, learners’ perceptions of structure, process and outcome quality were predominantly positive, reinforcing the advantages of online learning already highlighted in the literature. Given the variability across studies in course design and participant characteristics, there is clearly no “one-size-fits-all” approach to e-Learning. However, considering how adult learners view success in e-Learning healthcare courses can provide valuable insights for improving learning outcomes and experiences. Based on the findings of our review, the following key insights (related to course structure, process and outcome quality) may inform the design of purely online or hybrid e-Learning courses in health education:

- The LMS environment (structure quality): a) an LMS environment should extensively incorporate interactive and communication tools for instructor-student interaction and should also be able to act as a content repository (i.e., store large amounts of data); and b) both learners and instructors need sufficient knowledge of and familiarity with technology to use the LMS environment’s full potential, which is in line with other research (e.g., Ardito et al., 2006; Johnson et al., 2018; Palmer and Holt, 2009). Otherwise, process and outcome quality may be negatively affected.

- Interaction and feedback (process and outcome quality): Most studies highlighted the importance of regular, direct (informal) interaction with, and real-time feedback from, both the instructor and the peer group. This interaction helped to build and sustain online learning communities and to develop learners’ knowledge and confidence in applying theory to practice, which fits the assumptions of Fredericks (2011), for instance. This is also consistent with Minnaar (2011), who stated that direct communication with instructors and peers, as well as human contact, is a crucial need for e-Learners. The studies implemented interaction in very different ways: some through discussion boards or social media groups, others through synchronous learning activities. Face-to-face meetings may not always be possible owing to geographic or resource constraints. However, the findings of our review indicate that even a few in-person meetings at the beginning of a course may encourage later interaction in asynchronous learning phases. Whether the interaction takes place face-to-face or online may even be of secondary importance, as long as it is synchronous and offers the option of real-time feedback. McGinley et al. (2012), for example, evaluated how graduate students in special education perceive face-to-face and online learning and found that the online group perceived synchronous classroom discussions more positively than traditional face-to-face learners did, even though their discussions took place online. They also reported greater use of higher-level thinking skills and final grades similar to those of fully face-to-face groups.
A combination of synchronous and asynchronous learning phases, delivered purely online, could open up opportunities for international student collaboration and high-quality continuing professional development while still meeting the demand for real-time engagement. Nevertheless, instructors need sufficient facilitation skills to guide group discussions.

- Initial expectations (process and outcome quality): To ensure high satisfaction with an e-Learning course, it seems important that a learner’s initial expectations match the actual experience (#5). One way to achieve this would be to ensure that the content is highly applicable to practice, since this seems to be what learners in the healthcare professions expect. Another way could be to outline the course content and intended learning outcomes clearly in advance, so that learners form realistic expectations before starting the e-Learning course.

The data set was relatively small, with considerable variability in the information included in the published studies. For example, many studies did not differentiate between content and interface designers, did not specify whether the courses were free of charge, included in general study fees or subject to additional fees, or did not state learning outcomes transparently. This missing information led to the exclusion of potentially interesting studies.

Furthermore, the outcome variables varied greatly across studies, which made it necessary to define key concepts operationally, e.g., the learning approach or learner outcomes (such as success, engagement or satisfaction). Despite our attempts to do so, other researchers might come to different conclusions. Moreover, the three quality dimensions influence each other and can only be separated in theory. Notably, an appropriate infrastructure seems to be a precondition for a high-quality process and thus for the outcome of learning. Many of the studies used surveys or interviews, and social desirability bias is a known limitation of these methods; outcomes may therefore be skewed more favourably than in reality. This might have influenced our interpretation of the studies.

We recommend that further studies investigate the process quality of purely online courses in other therapeutic disciplines (e.g., speech-language therapy, occupational therapy or physiotherapy) and also evaluate mandatory self-paced online modules in curriculum-based programmes or continuing professional development courses. There was substantial variability in the time frames of the purely online and hybrid courses, and further inquiry is needed to determine how course length affects objective and subjective outcomes. In addition, almost no feedback was reported on the process quality of purely online courses; this represents a research gap and warrants consideration when interpreting the results of this review. Furthermore, studies comparing online modules with synchronous versus asynchronous interaction, as well as synchronous face-to-face versus online interaction, in therapeutic disciplines would yield valuable insights.

This review highlights best-practice considerations for e-Learning in healthcare. This is novel, as there is a paucity of research on best practices for applying knowledge and clinical skills through a problem-based e-Learning approach. Clinical utility is an important component of courses in the healthcare sciences, and integrating case-based exercises has proven effective. Our scoping review emphasised the value of establishing accurate initial expectations of the course and the critical role of selecting an LMS that is interactive and collaborative and that can act as a content repository. Ensuring real-time feedback and interaction, whether in person or online, emerged as a valuable didactic method, ideally complemented by a ‘getting-to-know-each-other’ activity at the beginning of the course. Finally, our scoping review showed that MCQs can easily be implemented in different e-Learning formats and can be employed effectively to assess higher-order skills.

Al-hawari, M.; Al-halabi, S.: The preliminary investigation of the factors that influence the e-learning adoption in higher education institutes: Jordan case study. In: International Journal of Distance Education Technologies, 8(4), 2010, pp. 1-11. http://doi.org/10.4018/jdet.2010100101 (last check 2023-07-26)

Aliyu, M. B.: Efficiency of Boolean search strings for information retrieval. In: American Journal of Engineering Research, 6(11), 2017, pp. 216-222. https://www.ajer.org/papers/v6(11)/ZA0611216222.pdf (last check 2023-07-26)

Al-Samarraie, H.; Teng, B. K.; Alzahrani, A. I.; Alalwan, N.: E-learning continuance satisfaction in higher education: a unified perspective from instructors and students. In: Studies in Higher Education, 43(11), 2018, pp. 2003-2019. https://doi.org/10.1080/03075079.2017.1298088 (last check 2023-07-26)

Ameen, N.; Willis, R.; Abdullah, M. N.; Shah, M.: Towards the successful integration of e-learning systems in higher education in Iraq: a student perspective. In: British Journal of Educational Technology, 50(3), 2019, pp. 1434-1446. https://doi.org/10.1111/bjet.12651 (last check 2023-07-26)

Ardito, C.; Costabile, M. F.; De Marsico, M.; Lanzilotti, R.; Levialdi, S.; Roselli, T.; Rossano, V.: An approach to usability evaluation of e-learning applications. In: Universal Access in the Information Society, 4(3), 2006, pp. 270-283. https://doi.org/10.1007/s10209-005-0008-6 (last check 2023-07-26)

*Ayoob, A.; Hardy, P.; Waits, T.; Brooks, M.: Development of a web-based curriculum to prepare diagnostic radiology residents during post-graduate year 1 by promoting learning via spaced repetition and interim testing: if you build it, will they come? In: Academic Radiology, 26(8), 2019, pp. 1112-1117. https://doi.org/10.1016/j.acra.2019.02.011 (last check 2023-07-26)

Baker, W. J.; Pittaway, S.: The application of a student engagement framework to the teaching of music education in an e-learning context in one Australian University. In: Proceedings, 4th Paris International Conference on Education, Economy and Society, Paris, France, 2012, pp. 27-38. https://analytrics.org/wp-content/uploads/2021/03/EES_Actes_Proceedings_2012_2ndEd.pdf (last check 2023-06-27)

*Bharuthram, S.; Kies, C.: Introducing e-learning in a South African higher education institution: challenges arising from an intervention and possible responses. In: British Journal of Educational Technology, 44(3), 2013, pp. 410-420. https://doi.org/10.1111/j.1467-8535.2012.01307.x (last check 2023-06-27)

Bloom, B. S.; Krathwohl, D. R.; Masia, B. B.: Taxonomy of educational objectives. Handbook 1: the cognitive domain, 20(24). McKay, New York, 1956.

*Buthelezi, L. I.; van Wyk, J. M.: The use of an online learning management system by postgraduate nursing students at a selected higher educational institution in KwaZulu-Natal, South Africa. In: African Journal of Health Professions Education, 12(4), 2020, pp. 211-214. https://doi.org/10.7196/AJHPE.2020.v12i4.1391 (last check 2023-06-27)

*Camargo, L. B.; Raggio, D. P.; Bonacina, C. F.; Wen, C. L.; Medeiros Mendes, F.; Strazzeri Bönecker, M. J.; Haddad, A. E.: Proposal of e-learning strategy to teach Atraumatic Restorative Treatment (ART) to undergraduate and graduate students. In: BMC Research Notes, 7(456), 2014. http://www.biomedcentral.com/1756-0500/7/456 (last check 2023-06-27)

Cole, M. T.; Shelley, D. J.; Swartz, L. B.: Online instruction, e-learning, and student satisfaction: a three year study. In: The International Review of Research in Open and Distance Learning, 15(6), 2014, pp. 111-131. https://doi.org/10.19173/irrodl.v15i6.1748 (last check 2023-06-27)

Cooke, A.; Smith, D.; Booth, A.: Beyond PICO: the SPIDER tool for qualitative evidence synthesis. In: Qualitative Health Research, 22(10), 2012, pp. 1435-1443. https://doi.org/10.1177/1049732312452938 (last check 2023-06-27)

*Dai, H. M.; Teo, T.; Rappa, N. A.; Huang, F.: Explaining Chinese university students’ continuance learning intention in the MOOC setting: a modified expectation confirmation model perspective. In: Computers & Education, 150, 2020, pp. 103850. https://doi.org/10.1016/j.compedu.2020.103850 (last check 2023-06-27)

*Dias, S. B.; Diniz, J. A.: Towards an enhanced learning management system for blended learning in higher education incorporating distinct learners’ profiles. In: Journal of Educational Technology & Society, 17(1), 2014, pp. 307-319. https://www.j-ets.net/collection/published-issues/17_1 (last check 2023-07-26)

Dollaghan, C. A.: The handbook for evidence-based practice in communication disorders. P. H. Brookes Publishing, Baltimore, Md., 2007.

Donabedian, A.: Evaluating the quality of medical care. In: The Milbank Memorial Fund Quarterly, 44(3), 1966, pp. 166-206. https://doi.org/10.2307/3348969 (last check 2023-07-26)

Dos Santos, L. M.: I want to become a registered nurse as a non-traditional, returning, evening, and adult student in a community college: a study of career-changing nursing students. In: International Journal of Environmental Research and Public Health, 17(16), 2020, pp. 5652. https://doi.org/10.3390/ijerph17165652 (last check 2023-07-26)

Fontaine, G.; Cossette, S.; Maheu-Cadotte, M. A.; Mailhot, T.; Deschênes, M. F.; Mathieu-Dupuis, G.; Côté, J.; Gagnon, M. P.; Dubé, V.: Efficacy of adaptive e-learning for health professionals and students: a systematic review and meta-analysis. In: BMJ Open, 9(8), 2019, pp. 1-17. https://doi.org/10.1136/bmjopen-2018-025252 (last check 2023-07-26)

Gerhardus, A.; Munko, T.; Kolip, P.; Müller, M.: Einführung: Lernen und Lehren in den Gesundheitswissenschaften. In: Gerhardus, A.; Kolip, P.; Munko, T.; Schilling, L.; Schlingmann, K. (Eds.): Lernen und Lehren in den Gesundheitswissenschaften. Hogrefe, Bern, 2020, pp. 13-24. https://elibrary.hogrefe.com/book/10.1024/85930-000 (last check 2023-07-26)

*Gray, M. M.; Dadiz, R.; Izatt, S.; Gillam-Krakauer, M.; Carbajal, M. M.; Falck, A. J.; Bonachea, E. M.; Johnston, L. C.; Karpen, H.; Vasquez, M. M.; Chess, P. R.; French, H.: Value, strengths, and challenges of e-learning modules paired with the flipped classroom for graduate medical education: a survey from the national neonatology curriculum. In: American Journal of Perinatology, 38(S 01), 2021, pp. e187-e192. https://doi.org/10.1055/s-0040-1709145 (last check 2023-07-26)

*Hemans-Henry, C.; Greene, C. M.; Koppaka, R.: Integrating public health–oriented e-learning into graduate medical education. In: American Journal of Preventive Medicine, 42(6), 2012, pp. S103-S106. https://www.sciencedirect.com/science/article/abs/pii/S0749379712002450 (last check 2023-07-26)

Hong, Q. N.; Pluye, P.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M. P.; Griffiths, F.; Nicolau, B.; O’Cathain, A.; Rousseau, M. C.; Vedel, I.: Mixed Methods Appraisal Tool (MMAT), version 2018. Registration of Copyright (#1148552), Canadian Intellectual Property Office, Industry Canada, 2018. https://bmjopen.bmj.com/content/bmjopen/11/2/e039246/DC3/embed/inline-supplementary-material-3.pdf?download=true (last check 2023-07-26)

Johnson, E.; Morwane, R.; Dada, S.; Pretorius, G.; Lotriet, M.: Adult learners’ perspectives on their engagement in a hybrid learning postgraduate programme. In: The Journal of Continuing Higher Education, 66(2), 2018, pp. 88-105. https://doi.org/10.1080/07377363.2018.1469071 (last check 2023-07-26)

Kleinman, S.: Strategies for encouraging active learning, interaction, and academic integrity in online courses. In: Communication Teacher, 19(1), 2005, pp. 13-18. https://doi.org/10.1080/1740462042000339212 (last check 2023-07-26)

Kuo, Y. C.; Belland, B. R.: An exploratory study of adult learners' perceptions of online learning: Minority students in continuing education. In: Educational Technology Research & Development, 64(4), 2016, pp. 661-680. https://doi.org/10.1007/s11423-016-9442-9 (last check 2023-07-26)

McGinley, V.; Osgood, J.; Kenney, J.: Exploring graduate students’ perceptual differences of face-to-face and online learning. In: The Quarterly Review of Distance Education, 13(3), 2012, pp. 177-182. https://www.learntechlib.org/p/131999/ (last check 2023-07-26)

*McGrath, T.; Thompson, G. A.: The impact of blended learning on professional identity formation for post-graduate music therapy students. In: Australian Journal of Music Therapy, 29, 2018, pp. 36-61.

Minnaar, A.: Students support in elearning courses in higher education- insight from a metasynthesis “A pedagogy of panic attacks”. In: Africa Education Review, 8(3), 2011, pp. 483-503. https://doi.org/10.1080/18146627.2011.618664 (last check 2023-07-26)

Moawad, R. A.: Online learning during the COVID-19 pandemic and academic stress in university students. In: Romanian Journal for Multidimensional Education, 12(1), 2020, pp. 100-107. https://doi.org/10.18662/rrem/12.1sup2/252 (last check 2023-07-26)

Muirhead, R. J.: E-Learning: is this teaching at students or teaching with students? In: Nursing Forum, 42(4), 2007, pp. 178-184. https://onlinelibrary.wiley.com/doi/10.1111/j.1744-6198.2007.00085.x (last check 2023-07-26)

Munn, Z.; Peters, M. D. J.; Stern, C.; Tufanaru, C.; McArthur, A.; Aromataris, E.: Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. In: BMC Medical Research Methodology, 18(143), 2018. https://doi.org/10.1186/s12874-018-0611-x (last check 2023-07-26)

Ouyang, F.; Li, X.; Sun, D.; Jiao, P.; Yao, J.: Learners’ discussion patterns, perceptions, and preferences in a Chinese massive open online course (MOOC). In: International Review of Research in Open and Distributed Learning, 21(3), 2020, pp. 264-284. https://doi.org/10.19173/irrodl.v21i3.4771 (last check 2023-07-26)

Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A.: Rayyan: a web and mobile app for systematic reviews. In: Systematic Reviews, 5(210), 2016. https://doi.org/10.1186/s13643-016-0384-4 (last check 2023-07-26)

Page, M. J.; McKenzie, J. E.; Bossuyt, P. M.; Boutron, I.; Hoffmann, T. C.; Mulrow, C. D.; Shamseer, L.; Tetzlaff, J. M.; Akl, E. A.; Brennan, S. E.; Chou, R.; Glanville, J.; Grimshaw, J. M.; Hrobjartsson, A.; Lalu, M. M.; Li, T.; Loder, E. W.; Mayo-Wilson, E.; McDonald, S.; …; Moher, D.: The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. In: BMJ, 372(71), 2021. https://doi.org/10.1136/bmj.n71 (last check 2023-07-26)

Palmer, S. R.; Holt, D. M.: Examining student satisfaction with wholly online learning. In: Journal of Computer Assisted Learning, 25(2), 2009, pp. 101-113. https://doi.org/10.1111/j.1365-2729.2008.00294.x (last check 2023-07-26)

Renes, S. L.; Strange, A. T.: Using technology to enhance higher education. In: Innovative Higher Education, 36(3), 2011, pp. 203-213. https://doi.org/10.1007/s10755-010-9167-3 (last check 2023-07-26)

Rodrigues, H.; Almeida, F.; Figueiredo, V.; Lopes, S. L.: Tracking e-learning through published papers: A systematic review. In: Computers & Education, 136, 2019, pp. 87-98. https://doi.org/10.1016/j.compedu.2019.03.007 (last check 2023-07-26)

*Rogo, E. J.; Portillo, K. M.: Building online learning communities in a graduate dental hygiene program. In: The Journal of Dental Hygiene, 88(4), 2014, pp. 213-228. https://jdh.adha.org/content/88/4/213 (last check 2023-07-26)

*Schedlitzki, D.; Young, P.; Moule, P.: Student experiences and views of two different blended learning models within a part-time post-graduate programme. In: International Journal of Management Education, 9(3), 2011, pp. 37-48.

Spanjers, I. A. E.; Könings, K. D.; Leppink, J.; Verstegen, D. M. L.; de Jong, N.; Czabanowska, K.; van Merriënboer, J. J. G.: The promised land of blended learning: quizzes as a moderator. In: Educational Research Review, 15, 2015, pp. 59-74. https://doi.org/10.1016/j.edurev.2015.05.001 (last check 2023-07-26)

Syauqi, K.; Munadi, S.; Triyono, M. B.: Students’ perceptions toward vocational education on online learning during the COVID-19 pandemic. In: International Journal of Evaluation and Research in Education, 9(4), 2020, pp. 881-886. https://ijere.iaescore.com/index.php/IJERE/article/view/20766 (last check 2023-07-26)

Tricco, A. C.; Lillie, E.; Zarin, W.; O'Brien, K. K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M. D. J.; Horsley, T.; Weeks, L.; Hempel, S.; Akl, E. A.; Chang, C.; McGowan, J.; Stewart, L.; Hartling, L.; Aldcroft, A.; Wilson, M. G.; Garritty, C.; … Straus, S. E.: PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. In: Annals of Internal Medicine, 169(7), 2018, pp. 467-473. https://doi.org/10.7326/M18-0850 (last check 2023-07-26)

*Uzzaman, M. N.; Jackson, T.; Uddin, A.; Rowa-Dewar, N.; Chisti, M. J.; Habib, G. M. M.; Pinnock, H.; Campbell, H.; Cunningham, S.; Fletcher, M.; Grant, L.; Juvekar, S.; Lee, W. P.; Morris, A.; Luz, S.; Mahmood, H.; Sheikh, A.; Simpson, C.; Soofi, S. B.; Yusuf, O.: Continuing professional education for general practitioners on chronic obstructive pulmonary disease: feasibility of a blended learning approach in Bangladesh. In: BMC Family Practice, 21(203), 2020. https://doi.org/10.1186/s12875-020-01270-2 (last check 2023-07-26)

Vaona, A.; Banzi, R.; Kwag, K. H.; Rigon, G.; Cereda, D.; Pecoraro, V.; Tramacere, I.; Moja, L.: E-learning for health professionals. In: Cochrane Database of Systematic Reviews, 2018. https://doi.org/10.1002/14651858.CD011736.pub2 (last check 2023-07-26)

Woodley, L. K.; Lewallen, L. P.: Acculturating into nursing for Hispanic/Latinx baccalaureate nursing students: a secondary data analysis. In: Nursing Education Perspectives, 41(4), 2020, pp. 235-240. https://doi.org/10.1097/01.NEP.0000000000000627 (last check 2023-07-26)

| Item No. | Item | Explanation/Coding Rule (if necessary) |
|---|---|---|
| | **Study Characteristics** | |
| 1 | Research Methodology | 1. Qualitative research; 2. Quantitative randomised controlled trials; 3. Quantitative non-randomised; 4. Quantitative descriptive; 5. Mixed methods studies |
| 2 | Study Design | According to the research methodology chosen above: 1. Qualitative research: ethnography, phenomenology, narrative research, grounded theory, case study, qualitative description; 2. Quantitative randomised controlled trials: RCT; 3. Quantitative non-randomised: non-randomised controlled trial, cohort study, case-control study, cross-sectional analytic study; 4. Quantitative descriptive: incidence or prevalence study without comparison group, survey, case series, case report; 5. Mixed methods studies: convergent design, sequential explanatory design, sequential exploratory design |
| 3 | Country | Country where the study was conducted |
| 4 | Level of Quality (MMAT) | 5 to 1: 5 (\*\*\*\*\*) = 100% of quality criteria met; 4 (\*\*\*\*) = 80%; 3 (\*\*\*) = 60%; 2 (\*\*) = 40%; 1 (\*) = 20% |
| | **Participants Characteristics** | |
| 5 | Number of Participants | Before drop-out |
| 6 | Age | In years, M/SD, range (if reported) |
| 7 | Educational Background | Undergraduate, graduate, postgraduate |
| 8 | Prior Knowledge in e-Learning | Yes/no |
| | **Course Characteristics** | |
| | *General Information* | |
| 9 | Curricula Based | Is the course curriculum-based or a stand-alone product? |
| 10 | Area of Profession | Study programme, responsible faculty |
| 11 | Course Topic | |
| | *Organisation* | |
| 12 | Time Frame & Workload | In total and per week (if reported) |
| 13 | LMS | Learning management system/e-Learning platform used, name if reported |
| 14 | Degree of Obligation | Mandatory vs. facultative |
| 15 | Fee Basis | Free of charge or fee-based? |
| 16 | Technical Support\* | Was technical support available or not? |
| 17 | Course/Instructional Designer\* | Specifies who was in charge of the design and implementation of the course |
| | **Teaching & Delivery Methods** | |
| 18 | Learning Approach | Inductive category formation |
| 19 | Mode | Synchronous, asynchronous, combined |
| 20 | Modality | Purely online (all sessions online); hybrid: face-to-face/in person plus online (no restriction on frequency) |
| 21 | Interaction | Interaction with peers and/or instructor reported |
| 22 | Didactic Methods | All materials, formats and media used and reported, verbatim |
| 23 | Transparency of Learning Outcomes | Yes/no |
| 24 | Assessment of Learning | Verbatim |
| 25 | Type of Assessment | |
| 26 | Bloom’s Taxonomy\* | Knowledge/remember, comprehension/understand, application/apply, analysis/analyse, evaluation/evaluate, synthesis/create |
| 27 | Time of Assessment | Only before, only after/at the end, in between, a combination |
| 28 | Tools\* | Verbatim |
| | **Evaluation of Students’ Success, Engagement, Perceptions** | |
| 29 | Students’ Success | Included as an outcome variable? Yes or no |
| 30 | Definition of the Outcome Variable ‘success’ | Verbatim definition |
| 31 | Method used for Data Collection | |
| 32 | Main Results | Including potential factors influencing students’ success |
| 33 | Students’ Engagement | Included as an outcome variable? Yes or no |
| 34 | Definition of the Outcome Variable ‘engagement’ | Clearly operationalised via objective, quantitative statistics; verbatim definition |
| 35 | Method(s) used for Data Collection | |
| 36 | Main Results | Including potential factors influencing students’ engagement |
| 37 | Students’ Perceptions | Included as an outcome variable? Yes or no |
| 38 | Definition of the Outcome Variable ‘perceptions’ | All students’ subjective feedback on how they experienced the e-course, their perceived satisfaction, and perceived strengths and challenges, resulting in a judgement of the quality of the e-course; verbatim definition (inductive category formation afterwards regarding the quality dimensions) |
| 39 | Method used for Data Collection | |
| 40 | Main Results | Including potential factors influencing students’ perceptions |

Appendix 2. Overview of the characteristics of the evaluated e-learning courses – Purely online models vs. Hybrid models

Modality: Hybrid: online plus occasional to regular face-to-face sessions (onsite; \* incl. BL = blended learning: combination of on-campus and online activities)

| Author (Year) | Study Programme/Area of Profession | Course Topic | Degree of Obligation | Time Frame & Workload | Used LMS | Learning Approach | Mode | Interaction Peers/Instructor | Didactic Methods | Forms of Assessment | Main Results |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | *Course characteristics: General Information* | | | *Organisation* | | *(Online) Teaching & Delivery Methods* | | | | | *Regarding students’ perceptions, engagement, success* |
| Bharuthram & Kies (2013) (#8) | Multiple professions within Health Sciences | HIV/AIDS | Mandatory | 3x weekly 60-min e-learning sessions at the end of a traditional face-to-face term | Knowledge Environment for Web-Based Learning (open source) | Interactive learning | Synchronous (face-to-face while interacting online) | Yes, with both (face-to-face and via discussion boards) | Computer onsite, discussion forums, library research tutorial | EED portfolio showing personal contribution on discussion boards | Engagement: Students from advantaged backgrounds and with more technology experience and access showed greater engagement in the tasks. Perceptions: Students were dissatisfied with some of the technical demands of the course and reported low confidence in e-learning. |
| Dias & Diniz (2014) (#10) | Human Kinetics | Various (e.g., sport management and psychomotor rehabilitation) | Mandatory | / | Moodle | / | Combined (BL, not further specified) | Yes, with both (via Moodle and class mail) | Not specified/various LMS tools available | / | Perceptions: Strengths of the Moodle LMS: content repository, teacher-student interaction/communication. Weaknesses: lack of ICT knowledge among both students and teachers (tools available on the LMS). Significant influencing factors: 1. degree of LMS interactivity/interactive learning activities; 2. teachers’ ICT knowledge and attitudes/beliefs (acquaintance); 3. students’ training |
| Gray et al. (2021) (#6) | Physiology, National Neonatology Curriculum | Respiratory physiology | Mandatory | Over 1 academic term | mededonthego.com | Problem-based learning, flipped classroom | Combined | Yes, with both, but only during synchronous classroom activities | Pre-classroom activities: online video modules (videos < 10 min: video slides and explanatory audio script); classroom activities: clinical case guide with patient scenarios and discussion questions | Performance in classroom discussions | Perceptions: 68% preferred the flipped classroom (FC) approach to traditional didactics (although they spent more pre-class preparation time on FC). > 90% agreed that their understanding improved, that faculty facilitators were helpful and encouraged discussion, and that the case discussions enhanced their learning experience. Overall, learners endorsed more strengths than challenges. Perceived strengths: relevance of content for clinical use, participants’ engagement in class discussion. Perceived challenges: insufficient facilitation skills for guiding case discussions |

|

McGrath& Thomp-son (2018)7 |

Music Therapy |

various within the study programme |

/ |

/ |

/ |

/ |

Combined (BL, not further specified) |

Yes, with both (not further specified) |

Online part: case studies, client's videos |

/ |

Perceptions: BL does not hinder professional identity formation (no significant differences between On Campus (OC) course and BL), they do develop similar community sense and confidence in applying theory to practice as in traditional OC settings, the study results highlight: importance of direct face-to-face feedback |

|

Rogo & Portillo (2014)9 |

Online Graduate Dental Hygiene Program |

Various (e.g., special needs populations, advanced dental hygiene theory) |

Mandatory |

Over one academic term |

Online forum not further specified |

Problembased learning |

Combined (BL orientation week, asynchronous online course, 2nd campus visit in between) |

Yes, with both (via various activities) |

Informal postings, group activities and projects, peer review activities |

Transparent partici-pation evaluation, 2–3 projects for summative assessment |

Perceptions: Most important features were…: 1.Program design: the week long “blended” on-campus visit required for orientation and the 2nd graduate seminar visit in the middle of the program; 2. Course design: weekly discussions, collaborative activities (with students being actively engaged to think critically), communication via small groups, opportunities for informal conversation; 3. Faculty: Faculty interaction with learners on a regular basis; and 4. Learner: Learner interaction displaying sensitive and respectful communication. |

|

Sched-litzki et al. (2011)11 |

Health & Social Care |

Programme in leadership & management for social care services |

/ |

over one academic term |

Blackboard wiki website |

Interactive learning |

Combined (BL, online: 2 synchr. sessions followed by asynchronous activities) |

Yes, with both, mainly via dis-cussion boards |

Interactive site on Blackboard: discussion boards, quizzes, video interviews, self-assessments Information Sharing Site on Black-board |

Critical-Reflective Summary (at the end of a module) |

Engagement: Students engaged more with interactive module than informational module |

|

Perceptions: Students did not show preference for the interactive Blackboard Site compared to an informational learning module; most students reported positive feelings toward blended learning approach |

|||||||||||

|

Uzzaman et al. (2020)12 |

General Practitioners |

Chronic Obstructive Pulmonary Disease |

elective |

40 h over 24 days (16 h online, 3 days @ 8h f2f) |

/ |

/ |

Combined (1st and last 2 days f2f, in between asyn-chronous online course) |

Yes, with both via private Face-book group and on f2f-days |

Online reading, chapter quizzes, discussions via a private Facebook group, practical f2f classes |

Pre & Post: COPD-PPAQ (question-naire) Post: oral exam, MCQ |

Success: End-of-course examination scores were similar between both the hybrid group and the fully f2f-group for knowledge and skills. Overall, participants’ self-reported adherence to COPD guidelines was improved. |

|

Perception: Hybrid model felt more convenient than f2f or purely online models. Advantages: Option for feedback during f2f, compatibility of studying and work. Disadvantages: reading online was uncomfortable, lack of motivation for online part, lack of real-time feedback during online discussions |

|||||||||||