“uniMatchUp!”: An application for promoting academic help-seeking and group development

urn:nbn:de:0009-5-55437

Abstract

This paper describes the conception, development, and evaluation of a peer support web application for university students. The main goal of uniMatchUp! is to help students find appropriate academic support and learning groups by providing Group Awareness (GA) information about various aspects of fellow students that are useful for online learning. The study contributes to a better understanding of the use of GA tools in the university context and reveals that active engagement with the application, in the form of contributed questions and answers, is positively associated with study satisfaction. During the interaction with uniMatchUp!, cognitive GA information (contribution quality) was considered more relevant than behavioral (amount of participation) and emotional (well-being) GA information about other students. The findings also point to potential improvements, which can shape the further development of uniMatchUp! and future applications.

Keywords: e-learning; peer support application; academic help-seeking; group awareness tools

The motivation for uniMatchUp! was the exceptional situation caused by the COVID-19 pandemic, which placed new demands on university students. From the summer semester 2020 to the summer semester 2021, university life took place largely virtually at many universities, revealing opportunities and challenges that may affect university life far beyond the pandemic. Learning material is mostly provided digitally, which increases individual flexibility at home but reduces the chance of personal exchange with suitable fellow students when questions and problems arise. Yet peers are one of the preferred sources of support (Atik & Yalçin, 2011). Relevant information about fellow students, such as their prior knowledge or their availability as learning partners, can hardly be estimated, especially at the beginning of an academic career and at a spatial distance. Although the original idea for uniMatchUp! stems from a crisis, it also offers many opportunities for computer-supported teaching and learning in higher education. The overarching goal of uniMatchUp! is to support students in their (partially) digital studies, both during the pandemic and throughout their studies in general. The main target group of uniMatchUp! is university students in their first academic year. It is usually during this initial phase of study that essential contacts are made, which enable joint learning and mutual support and thus may also have a positive influence on learning success. Therefore, as part of the nationwide hackathon Wir hacken das digitale Sommersemester (#Semesterhack), a concept for the peer support web application uniMatchUp! was developed to address the challenges described above and to support university students in self-regulated but socially integrated learning.
This concept builds on extensive preliminary work by the authors’ research group and beyond, such as research on different types of Group Awareness (Bodemer & Dehler, 2011; Bodemer, Janssen, & Schnaubert, 2018; Ollesch, Heimbuch, & Bodemer, 2019), research on social embeddedness (Schlusche, Schnaubert, & Bodemer, 2021), and research on Group Awareness tools for academic help-seeking (Schlusche, Schnaubert, & Bodemer, 2019). It was one of the winning projects of the hackathon. Subsequently, the development of the first version of uniMatchUp! and its evaluation were funded by the Federal Ministry of Education and Research from September to December 2020.

Group Awareness (GA), the central construct of the application, refers to the perception of social contextual information in a group, such as group members’ knowledge, activities, or feelings (Bodemer et al., 2018). Particularly for the transition into university, research has recently linked GA tools to academic help-seeking, assuming that GA information improves decisions about potential helpers and, subsequently, leads to higher academic success (Schlusche et al., 2019; 2021). GA tools enable an improved assessment of learning partners by collecting, transforming, and presenting such contextual information, which is difficult to perceive in online learning scenarios (Bodemer & Dehler, 2011). The project at hand pursued two GA-related goals: first, the development of an intuitively usable mobile and web-enabled application that supports university students with GA information; second, the evaluation of how students make use of such GA information in practice. uniMatchUp! is designed to help students ask targeted questions and find long-term study partners or groups. Apps and concepts that try to match learning partners automatically or autonomously through GA information already exist. The Peer Education Diagnostic and Learning Environment (PEDALE) by Konert et al. (2012), for example, proposes matching learners with an automatic algorithm based on personal variables such as their knowledge level or age, after they have taken a knowledge test. This is intended to form heterogeneous rather than homogeneous learning groups in order to optimize knowledge exchange in the peer feedback process. The apps PeerWise (Denny, Luxton-Reilly, & Hamer, 2008) and PeerSpace (Dong, Li, & Untch, 2011) take a rather non-automated matching approach.
Behavioral and cognitive GA information, such as participation points and the upvoting and downvoting of contributions, is the main type of information used here to support self-driven matching in the respective peer support networks. Even though such existing apps in the learning sector already use GA information to connect learners, this awareness information is usually rudimentary and is not visualized in a combined and detailed way for the individual learner. Moreover, emotional GA variables, which can be very important for maintaining a positive and constructive group climate (Ollesch, Venohr, & Bodemer, 2022), are not considered in these existing app concepts. In addition, the concepts presented in academia and practice are context-dependent rather than comprehensive solutions for building serious learning relationships and supporting students' everyday learning, especially in the introductory phase of study; this is what the uniMatchUp! application aims to provide. Therefore, even though comparable applications consider student characteristics when building peer support networks, to the authors’ knowledge there is no usable application that presents cognitive, behavioral, and especially emotional GA information at the individual and group level in combination to adequately support students in their peer matching and learning process.

Using GA tools in academic contexts is promising, since studies have shown them to be helpful in terms of partner selection, learning processes, and outcomes (Bodemer et al., 2018). GA tools can present various information, such as information about other learners’ knowledge (cognitive), activities (behavioral), or emotions (emotional), each of which is associated with different effects (Ollesch et al., 2019). Cognitive GA tools support grounding and partner modeling processes, which facilitate adaptation to the learning partners’ skills (Bodemer & Dehler, 2011). Behavioral GA tools have the potential to trigger social comparison processes and increase group members’ motivation to participate (Kimmerle & Cress, 2008). Emotional GA tools show positive effects on emotional outcomes by improving emotion understanding (Eligio, Ainsworth, & Crook, 2012). The combination of these three types of GA information may help students assess fellow students better before selecting them and thus adapt to specific characteristics of learning partners in subsequent communication (Ollesch et al., 2019). It is assumed that the combined presentation of different types of GA information facilitates academic help-seeking (Schlusche et al., 2019). This might be particularly the case during phases of online teaching, which lack immediate face-to-face contact. In this way, GA support may also have a positive impact on academic success (e.g., study satisfaction, intention to drop out, and grades) (Algharaibeh, 2020; Schlusche et al., 2021). Furthermore, supporting such processes is seen as instrumental for improving students’ social connectedness, which enables self-regulated student matching (Schlusche et al., 2021; Wilcox, Winn, & Fyvie‐Gauld, 2005). This leads to the first research question:

RQ1: To what extent is the interaction with a peer support application that presents three types of GA information related to an increase in (a) academic success and (b) social connectedness?

The joint presentation of different types of GA information can help students make choices based on their own preferences (Schlusche et al., 2019). However, the effects of cognitive, behavioral, and emotional GA information are often studied separately (Ollesch et al., 2019), which does not allow conclusions about subjective preferences. For a peer support application to be adopted in everyday life, it is essential to know and take into account the acceptance and wishes of the target group, that is, the students. Therefore, three types of GA information are integrated in uniMatchUp! to investigate the relevance of cognitive, behavioral, and emotional GA information in the selection of fellow students (Ollesch, Heimbuch, Krajewski, Weisenberger, & Bodemer, 2020). Based on these considerations, the second research question is posed:

RQ2: How relevant are cognitive, behavioral, and emotional group awareness attributes for students in the digital selection of fellow students?

Moreover, it is important to ensure that both the matching of students and the subsequent communication are as intuitive as possible. Findings on the optimal design and use of GA information in a university context are still lacking. Therefore, in the following study, different functions for private and public exchange at individual and group level are implemented. These functions are evaluated to answer the third research question:

RQ3: How should a peer support application be designed to facilitate finding suitable learning partners and communicating with each other?

uniMatchUp! is a responsive web application that can be accessed with common browsers and is programmed in Python, based on the web framework Django. The Spark Responsive Admin Template of Bootstrap 4 is used to build the user interface. The frontend web pages are designed with HTML and CSS, and JavaScript is used for client-side form validation. The database is a PostgreSQL database. Cloudinary, a SaaS provider, is used to manage media (here: various files such as text documents, PDF files, and images). The TinyMCE editor allows students to format their input appropriately.

In uniMatchUp!, university students register with their university email addresses; this ensured that only students of the University of Duisburg-Essen could register for the first test phase. Nicknames are used as usernames to lower the barrier to asking questions. The application is intended to support users in three scenarios: matching help-seekers with helpers for concrete, short-term questions in 1) a public forum or 2) a private 1:1 exchange, and matching students into longer-term 3) learning groups. These three functions and the respective implementation of GA information are described below.

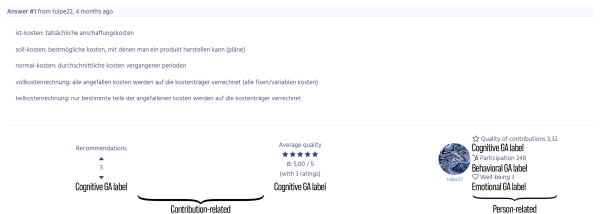

The public forum function centers on the content-related forums for each offered lecture (Vorlesungsspezifische Foren). In these forums, students can discuss questions and course content with all fellow students and thus benefit from the collective knowledge of others. There are also forums for other concerns: a forum for getting to know each other (Kennenlernbörse) and a forum for organizational matters (Organisationsforum). In this way, students can get in contact with each other and thus increase their social connectedness. In the public forum, additional GA information is provided on several levels. This information is intended to help students better assess potential learning partners and adapt to them (Bodemer et al., 2018; Ollesch et al., 2019). At the contribution level, users can recommend answers to questions (cognitive GA, Empfehlungen) and rate the contribution quality of answers in a more fine-grained way on a 5-star Likert scale (cognitive GA, Durchschnittliche Qualität). A sample answer and the corresponding rating options are shown in Fig. 1, left side (no real username is displayed here).

Fig. 1: Example excerpt from the forum with two cognitive GA labels on the contribution level on the left and three different types of GA information on the personal level on the right

Based on the contribution-related GA information, a person-related average quality value (cognitive GA, Qualität der Beiträge) is calculated across all answers a user has written in the forum and in the private help-seeking function (see section 2.2). It is displayed next to the user's icon in the public forum and in the respective user profile to facilitate skill assessment (see Bodemer & Dehler, 2011). On the behavioral level, a participation counter (behavioral GA, Partizipation) tracks and visualizes the number of questions asked, answers given, and ratings of other users’ contributions across the whole application to increase motivation to participate (Kimmerle & Cress, 2008). Each time one of these actions is performed, the counter increases by one. Well-being in the student network (emotional GA, Wohlbefinden) is self-assessed by students on a 5-star Likert scale (1 = "very low well-being", 5 = "very high well-being") and can be changed at any time to trigger emotion co-regulation processes (Eligio et al., 2012). In summary, all GA information at the personal level is updated continuously: some values change through interactions with the system (participation), others through user input (ratings of contribution quality and self-assessed well-being). For a sample user with assessed GA information, see Fig. 1, right side.
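The person-level GA values described above can be sketched as a small Python data class (Python because the application itself is written in Python). This is a minimal illustration, not the uniMatchUp! code: the class name `PersonalGA`, its methods, and the assumption that the quality value is a plain mean over all star ratings a user's answers have received are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import fmean


@dataclass
class PersonalGA:
    """Hypothetical container for one user's person-level GA values."""

    participation: int = 0                              # behavioral GA (Partizipation)
    ratings: list[int] = field(default_factory=list)    # stars received on own answers
    wellbeing: int | None = None                        # emotional GA (Wohlbefinden), 1-5

    def record_action(self) -> None:
        # Asking a question, giving an answer, or rating someone else's
        # contribution each increases the counter by one.
        self.participation += 1

    def receive_rating(self, stars: int) -> None:
        # Ratings from the forum and from private help-seeking are pooled.
        if not 1 <= stars <= 5:
            raise ValueError("ratings use a 5-star scale")
        self.ratings.append(stars)

    @property
    def quality(self) -> float | None:
        # Cognitive GA (Qualität der Beiträge): assumed plain mean of all ratings.
        return fmean(self.ratings) if self.ratings else None

    def set_wellbeing(self, stars: int) -> None:
        # Self-assessed; can be changed at any time.
        if not 1 <= stars <= 5:
            raise ValueError("well-being uses a 5-star scale")
        self.wellbeing = stars
```

In this sketch, the rater (not the rated user) calls `record_action()` when rating a contribution, while the author of the rated answer receives the stars via `receive_rating()`.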

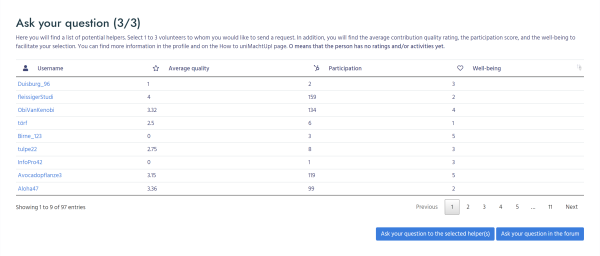

In uniMatchUp!, students can also send questions privately to a selection of up to three potential helpers. To do this, users 1) specify the course topic and 2) write a question title and an explanatory question text. Help-seekers can also assign tags (keywords) to a question for better categorization, and file attachments can optionally be added. After the question has been specified, 3) a selection of all students who have taken the university course is displayed along with the same three person-related GA values as in the public forum (see Fig. 2). The selection interface can be sorted by these GA attributes. The private help-seeking function is based on a multi-step HTML form in which AJAX requests are sent to the server at the appropriate steps to transfer data and display the response dynamically without reloading the entire page, which improves the performance and usability of the web application.

To ensure that help-seekers can select potential helpers based on their preferred GA information, for each of the three types of GA information at least one student with a low, one with a medium, and one with a high value is presented. These nine potential helpers are displayed in random order on the first page of the list. Technically, to enable a distributed and random presentation, a list is created for the respective course for each type of GA information, containing the user IDs and the corresponding GA values. Each list is then sorted by GA value, so that a user's relative value can be derived from their position in the list (a higher value corresponds to a higher position). To achieve an evenly distributed selection, each list is divided into three sub-lists by terciles, whose boundaries are obtained by multiplying the list length by one-third and two-thirds.

After the sub-lists have been created, one user is randomly selected from each of the resulting nine sub-lists, with a check whether this user has already been selected as a potential helper based on another type of GA information. As a result, the selection of potential helpers reflects the actual range of values of the respective GA information among all users in the course. This yields a high diversity of recommended helpers with different GA values (low, medium, high) rather than only users who are high or low on all GA values.

After the nine users shown on the first page of the help-seeking interface have been selected and shuffled into a random display order, all other, unselected users from the course are appended to the final list. This allows help-seekers to explore all potential helpers by sorting the list according to their own (GA) preferences.
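The tercile-based selection of the first-page helpers can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the application's code: the function names are hypothetical, each GA type is assumed to be given as a mapping from user ID to value for the same set of course members, and a sub-list whose users have all been picked already is simply skipped.

```python
from __future__ import annotations

import random


def tercile_sublists(values: dict[str, float]) -> list[list[str]]:
    """Sort user IDs by one GA value and split them into low/medium/high terciles."""
    ranked = sorted(values, key=lambda user: values[user])  # ascending GA value
    n = len(ranked)
    first, second = int(n / 3), int(2 * n / 3)              # list length x 1/3 and x 2/3
    return [ranked[:first], ranked[first:second], ranked[second:]]


def select_helpers(ga_by_type: dict[str, dict[str, float]], rng: random.Random) -> list[str]:
    """Draw one user per tercile of each GA type, shuffle them for the
    first page, then append all remaining course members."""
    if not ga_by_type:
        return []
    selected: list[str] = []
    for values in ga_by_type.values():
        for sublist in tercile_sublists(values):
            # Skip users already chosen via another GA type.
            candidates = [user for user in sublist if user not in selected]
            if candidates:
                selected.append(rng.choice(candidates))
    rng.shuffle(selected)                                    # random display order
    course_members = next(iter(ga_by_type.values()))
    rest = [user for user in course_members if user not in selected]
    return selected + rest
```

Because a sub-list is only skipped when all of its users are already on the first page, every tercile of every GA type stays represented there, even if fewer than nine distinct users can be drawn in very small courses.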

When a help-seeker selects one or more helpers and sends them a question, the system notifies them through a pop-up message, which lets them either accept or reject the question. If the question is accepted, a chat opens between the help-seeker and the helper, in which they can exchange information and clarify the question. One of the technical challenges of uniMatchUp! is real-time data transfer for the chat functionality, which was solved with WebSockets. In contrast to a classical request-response cycle, after which the server retains no information about the state, WebSockets enable bidirectional data exchange: a persistent connection is established between client and server, which also allows the server to initiate a message exchange (Liu & Sun, 2012).

Once the question has been answered from the questioner’s perspective and the interaction has ended, the questioner can close the question, and it is automatically removed for the other potential helpers. The questioner can then rate the helper’s contribution quality, analogous to the average quality star rating of forum contributions (see Fig. 1, Durchschnittliche Qualität). This rating is combined with the other quality ratings from private help-seeking and the public forum. Resolved questions are archived, which allows users to revisit older conversations and access the documented knowledge.
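The life cycle of a private question (invitation, acceptance or rejection, closing, and rating) can be summarized as a small state model. The sketch below is hypothetical: the class and method names are illustrative, the chat and WebSocket layer is left out, and the handling of rejections and of several accepting helpers is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum, auto


class Status(Enum):
    OPEN = auto()    # sent to one to three potential helpers
    CLOSED = auto()  # resolved by the questioner, then archived


@dataclass
class PrivateQuestion:
    """Hypothetical model of a private help request."""

    asker: str
    invited: set[str]                                 # pending invitations
    accepted: set[str] = field(default_factory=set)   # helpers chatting with the asker
    status: Status = Status.OPEN
    rating: int | None = None                         # star rating of the helper's answer

    def __post_init__(self) -> None:
        self.invited = set(self.invited)              # copy so the caller's set is untouched
        if not 1 <= len(self.invited) <= 3:
            raise ValueError("a question goes to one to three potential helpers")

    def respond(self, helper: str, accept: bool) -> None:
        if self.status is not Status.OPEN or helper not in self.invited:
            raise ValueError("no open invitation for this helper")
        self.invited.discard(helper)
        if accept:
            self.accepted.add(helper)                 # a chat would be opened here

    def close(self, stars: int | None = None) -> set[str]:
        """Close the question; return the invitations that are withdrawn."""
        if self.status is not Status.OPEN:
            raise ValueError("question is already closed")
        if stars is not None and not 1 <= stars <= 5:
            raise ValueError("ratings use a 5-star scale")
        withdrawn, self.invited = self.invited, set()
        self.status = Status.CLOSED
        self.rating = stars
        return withdrawn
```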

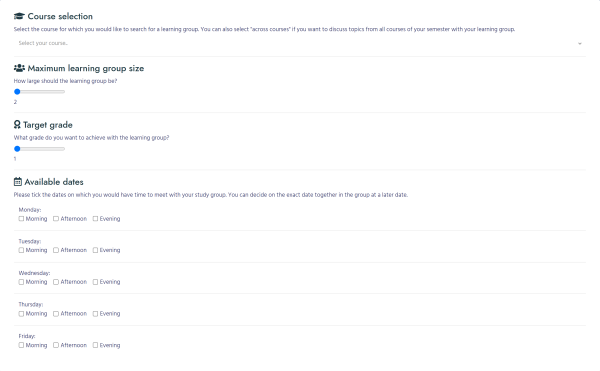

The goal of the learning group search is to allow university students to get to know each other through a group chat and share content related to a specific course, i.e., to form a group. Forming is the first stage in Tuckman and Jensen’s (1977) revised life cycle of group development (Forming, Storming, Norming, Performing, and Adjourning), in which efforts should be made to ensure the effectiveness and efficiency of the subsequent group work (Bonebright, 2010). This is also the phase that uniMatchUp! supports by providing GA information that helps students get to know each other and secure group membership. When students self-select groups, they often form purely homogeneous groups, so a balanced group composition is not guaranteed (Johnson & Johnson, 1991; Zheng & Pinkwart, 2014). This reduces the chance of high-quality learning and positive peer relationships (Gruenfeld, Mannix, Williams, & Neale, 1996). Even though homogeneous groups may sometimes be more harmonious, it is often beneficial to combine students with complementary cognitive abilities to achieve higher performance (Johnson & Johnson, 1991; Manske, Hecking, Hoppe, Chounta, & Werneburg, 2015). To make group selection in uniMatchUp! more balanced while retaining some autonomy, an algorithm was developed that suggests groups based on heterogeneous competencies (quality ratings) and homogeneous preferences but still allows free selection (see also Konert, Burlak, & Steinmetz, 2014). Even though the methods used in uniMatchUp! come from the field of group formation (e.g., heterogeneity and homogeneity metrics), users still decide for themselves whether to join a recommended group. Partially heterogeneous grouping may be more beneficial here than purely homogeneous grouping, as it avoids clusters of students who all have low quality values and encourages diversity in groups.
Furthermore, to find an existing group or to create a new one, users can specify several preferred attributes: the desired maximum group size, the expected grade, and available dates (see Fig. 3). Based on this information, uniMatchUp! searches for existing groups that best meet the defined criteria. A recommender system then ranks the resulting groups, suggesting mixed groups based on the heterogeneous cognitive GA quality label (i.e., users with high, moderate, and low average contribution quality) and homogeneous preferences (group size, grades, and dates), in descending order of relevance for the learning group seeker. In uniMatchUp!, heterogeneity and homogeneity are not operationalized via concrete threshold values. Whether a group is considered particularly heterogeneous or homogeneous depends on the attributes of the learning group seeker and on all available group alternatives.

The proposed approach is based on optimizing a defined relevance value. Here, the term “relevance” describes a calculated measure, given in the similarity interval [0;1], of how well existing learning groups match the search query. By means of pairwise comparisons, the attributes of the person searching are compared with those of the already established groups. For contribution quality ratings, the greater the distance between the seeker and the group members in the respective courses, the more heterogeneous and thus the more relevant the group is with respect to this attribute. For all other attributes, the deviation is also calculated, but here a minimal deviation, i.e., a high similarity, is assigned a high relevance value. This approach follows the principle of "maximizing average satisfaction" (Christensen & Schiaffino, 2011): the average preference is calculated for each potential group candidate and used as the basis for selecting groups. The resulting values are then compared with the aggregated preferences of the users in the existing group profile to determine a group recommendation (Christensen & Schiaffino, 2011). The recommender algorithm is explained in more detail below.

Fig. 3: Example excerpt of the creation page for a learning group at which the criteria for the group recommendation are determined (group size, grades, and dates)

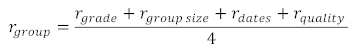

The overall relevance $r_{\text{group}}$ is the average of the relevance values of the three homogeneous attributes and of the heterogeneous quality composition, as illustrated in the following formula:

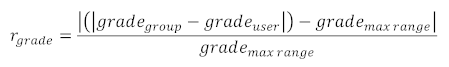

More specifically, $r_{\text{grade}}$ is based on the similarity between the expected grade of the learning group (mean value) and that of the user. To obtain a relevance value in the range [0;1], the absolute difference between the two is first calculated and then related to the maximum possible distance between two grades. The smaller this difference, the further it lies below the maximum difference, and the higher the resulting relevance value $r_{\text{grade}}$. This calculation can be expressed with the following formula:

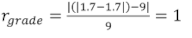

For this purpose, grades of the German university grading system were mapped onto a scale of integers with identical distances (1.0≙1, 1.3≙2, 1.7≙3, 2.0≙4, 2.3≙5, 2.7≙6, 3.0≙7, 3.3≙8, 3.7≙9, and 4.0≙10). The variable $\text{grade}_{\text{max range}}$ describes the maximum possible difference between two mapped grade values (10 - 1 = 9), which ensures a uniform calculation of similarity. Suppose a group’s grade preference is 1.7 and the requesting user aims for the same grade; this leads to the maximum relevance value of

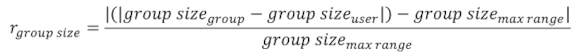
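The grade mapping and the grade relevance can be sketched in Python as follows. The sketch assumes, as a reading of the description above, that the relevance equals one minus the absolute difference divided by the maximum range of 9, and that the group's mean is taken over the mapped values; the function name `r_grade` is hypothetical.

```python
from __future__ import annotations

# Equidistant mapping of German university grades, as listed in the text.
GRADE_SCALE = {1.0: 1, 1.3: 2, 1.7: 3, 2.0: 4, 2.3: 5,
               2.7: 6, 3.0: 7, 3.3: 8, 3.7: 9, 4.0: 10}
GRADE_MAX_RANGE = max(GRADE_SCALE.values()) - min(GRADE_SCALE.values())  # 10 - 1 = 9


def r_grade(member_grades: list[float], user_grade: float) -> float:
    """Relevance in [0, 1]: one minus the normalized distance between the
    group's mean mapped grade and the user's mapped grade."""
    group_mean = sum(GRADE_SCALE[g] for g in member_grades) / len(member_grades)
    distance = abs(group_mean - GRADE_SCALE[user_grade])
    return 1 - distance / GRADE_MAX_RANGE


print(r_grade([1.7], 1.7))        # 1.0 (the example above)
print(r_grade([1.3, 2.0], 4.0))   # group mean 3 vs. user 10
```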

The relevance rgroup size is calculated similarly to rgrade, although no mapping as described above is performed here, since the values of the attribute group size already consist of absolute numbers. The following formula is derived from this:

In uniMatchUp! the minimum group size is 2 and the maximum group size is 5. It follows that the maximum difference between the user and the group (group sizemax range) is 3.

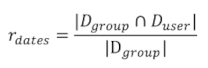
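Analogously, the group size relevance can be sketched with the bounds stated above, assuming it equals one minus the absolute difference between the preferred (maximum) group sizes divided by 3; the function name is hypothetical.

```python
MIN_GROUP_SIZE, MAX_GROUP_SIZE = 2, 5
GROUP_SIZE_MAX_RANGE = MAX_GROUP_SIZE - MIN_GROUP_SIZE  # 5 - 2 = 3


def r_group_size(group_size: int, user_size: int) -> float:
    """Relevance in [0, 1] for the preferred (maximum) group size."""
    for size in (group_size, user_size):
        if not MIN_GROUP_SIZE <= size <= MAX_GROUP_SIZE:
            raise ValueError("group sizes range from 2 to 5")
    return 1 - abs(group_size - user_size) / GROUP_SIZE_MAX_RANGE


print(r_group_size(4, 4))  # 1.0
print(r_group_size(2, 5))  # 0.0
```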

The calculation of the relevance rdates is based on the number of specified dates matching between the group and user. The more group’s dates fit the user’s dates, the higher the probability that common learning dates can be arranged and thus the relevance of rdates increases. The cardinality of a set, i.e., the number of all contained elements, is used for the calculation:

Here, the set Dgroup contains the intersection of all existing group members' date selections and Duser contains all dates specified by the potential new user.

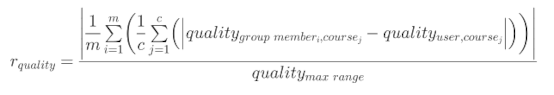
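A sketch of the date relevance follows. Because the formula itself is only available as an image, the normalization is an assumption: the sketch divides the number of shared dates by the number of dates the user selected, so that the value stays in [0;1]. The function name and the date labels are hypothetical.

```python
from __future__ import annotations


def r_dates(member_dates: list[set[str]], user_dates: set[str]) -> float:
    """Share of the user's dates that all current group members can also make."""
    if not member_dates or not user_dates:
        return 0.0
    d_group = set.intersection(*member_dates)   # D_group: dates shared by all members
    return len(d_group & user_dates) / len(user_dates)


members = [{"Mon 10:00", "Tue 14:00", "Wed 16:00"}, {"Tue 14:00", "Wed 16:00"}]
print(r_dates(members, {"Tue 14:00", "Thu 12:00"}))  # 0.5
```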

In contrast to the search attributes specified by the user (grade, group size, and dates), $r_{\text{quality}}$ describes a heterogeneous variable based on the average quality ratings that users have received from their fellow students for their contributions. Here, more divergent values should lead to a higher relevance value: the more the cognitive GA value (contribution quality) of one person in a specific course differs from that of another person, the better they may complement each other. Since a learning group can be used to discuss the contents of all courses, all courses shared by the seeker and the group members are included in this calculation. For each group member, the algorithm therefore calculates how much their average quality ratings in each shared course differ from the requesting user's values. Since $r_{\text{quality}}$ describes how strongly the user can complement all individual group members, the relevance values of the individual group members are averaged, leading to the following formula:

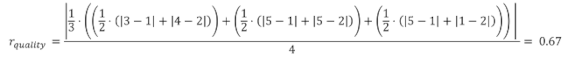

Here, $m$ is the number of all group members and $c$ is the number of all common courses between the requesting user and the respective group member. An example of a user request to a group of three is illustrated in Tab. 1.

| Person | Quality course 1 | Quality course 2 |
|---|---|---|
| User | 1 | 2 |
| Group member 1 | 3 | 4 |
| Group member 2 | 5 | 5 |
| Group member 3 | 5 | 1 |

Tab. 1: Quality ratings for two common courses. Note that these represent average values and therefore decimal numbers are also possible.

Given the example values and a maximum rating range of 4, this would lead to a relevance value of:
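The calculation for Tab. 1 can be reproduced with the following sketch, assuming that each absolute difference is divided by the maximum rating range of 4, averaged over the $c$ shared courses per member, and then averaged over the $m$ members. Under these assumptions (which may differ in detail from the original formula), the sketch returns about 0.67 for the values in Tab. 1 (0.5, 0.875, and 0.625 per member). The function name and the handling of members without shared courses are hypothetical.

```python
from __future__ import annotations

QUALITY_MAX_RANGE = 5 - 1  # 5-star scale: largest possible rating difference


def r_quality(user: dict[str, float], members: list[dict[str, float]]) -> float:
    """Mean over group members of the mean normalized quality difference
    across the courses each member shares with the requesting user."""
    per_member = []
    for member in members:
        common = user.keys() & member.keys()  # the c shared courses
        if not common:
            continue  # assumption: members without shared courses are skipped
        diffs = [abs(user[course] - member[course]) / QUALITY_MAX_RANGE for course in common]
        per_member.append(sum(diffs) / len(diffs))
    return sum(per_member) / len(per_member) if per_member else 0.0


# Average quality ratings from Tab. 1
user = {"course 1": 1, "course 2": 2}
members = [
    {"course 1": 3, "course 2": 4},  # group member 1
    {"course 1": 5, "course 2": 5},  # group member 2
    {"course 1": 5, "course 2": 1},  # group member 3
]
print(round(r_quality(user, members), 2))  # 0.67
```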

After the four relevance values have been averaged according to the formula for $r_{\text{group}}$ above, the groups are recommended in descending order of relevance (higher values first). Users can then join any of the existing groups from the overview as a member. For each group, the GA information about the individual group members can also be displayed.
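The final ranking step can be sketched as an unweighted mean of the four relevance values followed by a descending sort. The function names and the input format (one tuple of precomputed relevance values per group ID) are hypothetical.

```python
from __future__ import annotations

from statistics import fmean


def r_group(r_grade: float, r_group_size: float, r_dates: float, r_quality: float) -> float:
    """Unweighted mean of the four relevance values, each in [0, 1]."""
    return fmean([r_grade, r_group_size, r_dates, r_quality])


def rank_groups(relevances: dict[str, tuple[float, float, float, float]]) -> list[tuple[str, float]]:
    """Return (group ID, r_group) pairs, most relevant group first."""
    scored = [(group_id, r_group(*parts)) for group_id, parts in relevances.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


print(rank_groups({"A": (1.0, 1.0, 0.5, 0.5), "B": (0.5, 0.5, 0.5, 0.5)}))
# [('A', 0.75), ('B', 0.5)]
```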

N = 101 German university students (mean age = 20.14, SD = 3.99, range 17–51 years) were recruited for the study via university lectures and social networking sites. All were first-semester students at the University of Duisburg-Essen: 66.3% in Economics, 14.9% in Business Education, and 18.8% in Applied Computer Science. Gender distribution was balanced between female (50.5%) and male (46.5%) participants; 3% of participants identified as diverse. The students were informed that they would be testing a new web application to be integrated into the structures of the University of Duisburg-Essen. Teachers did not take part in this test phase.

Regarding RQ1, GA was assessed with an adapted version of Mock’s awareness taxonomy (Mock, 2017) on a 5-point Likert scale ranging from 1 (“does not apply at all”) to 5 (“completely true”). Six items each asked about the participants’ general awareness of other students’ skills and relationships (GA self; Cronbach’s α = .82; e.g., “I have an overview of the topics the course participants are familiar with.”) and about other students’ awareness of the participants themselves (GA other; α = .91; e.g., “The course participants have an overview of the topics I am familiar with.”), both rated by the participants. Three measures were used for academic success. Participants were asked to indicate 1) the grades they expected to achieve in the respective courses, ranging from the usual grade levels of “1.0” to “> 4.0”, as well as “I am not taking the course.” An overall mean was calculated across all courses taken in the three study programs, weighted by the achievable credit points. To measure 2) study satisfaction, two subscales of the short form of the Study Satisfaction Questionnaire (Westermann, Heise, & Spies, 2018) were used (α = .83; e.g., “Overall, I am satisfied with my current studies.”), with response options ranging from 0 (“the statement does not apply at all”) to 100 (“the statement applies completely”). To measure 3) the intention to drop out of the study program, one item by Fellenberg and Hannover (2006) (“I am seriously thinking of dropping out of university.”) was used, ranging from 1 (“not at all true”) to 6 (“completely true”). Social connectedness was measured with three subscales of the assessment of social connectedness by van Bel, Smolders, IJsselsteijn, and De Kort (2009) (α = .94; e.g., “I would like to have a larger circle of friends in my degree program.”), rated on a 7-point scale from 1 (“strongly disagree”) to 7 (“strongly agree”).
To relate these variables to the interaction with uniMatchUp!, participants estimated their total duration of use in hours after the interaction phase. In addition, the numbers of questions posed and answers given were extracted from the log data. With regard to RQ2, to indicate the relevance of the different GA types, participants rated how helpful (item 1; e.g., “How helpful did you find the displayed participation (of the user) in assessing the relevance of posts in the forum?”), important (item 2), and steering (item 3) each of the five GA attributes (see Fig. 1) was during the interaction, on a scale from 1 (“not at all”) to 6 (“very much”). Overall mean scores across these three items were calculated. Addressing RQ3, participants assessed the likelihood of using the whole application (item 1; e.g., “How likely is it that you will use the "uniMatchUp!" app in the future?”) as well as the public forum (item 2), private help-seeking (item 3), and learning group search (item 4) features, with response options ranging from 1 (“not at all likely”) to 5 (“very likely”). User experience was measured with the User Experience Questionnaire (UEQ) (Laugwitz, Held, & Schrepp, 2008), whose items are rated from -3 to 3 between two opposite properties as poles (e.g., “boring” and “exciting”). According to Laugwitz and colleagues, values between -0.8 and 0.8 represent a neutral evaluation, and values > 0.8 a positive one.
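The internal-consistency values reported above (Cronbach’s α) follow the standard formula $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_X^2}\right)$ for $k$ items with item variances $s_i^2$ and total-score variance $s_X^2$. A minimal Python sketch for illustration only; the study does not state which software was used, and the function name is hypothetical.

```python
from __future__ import annotations

from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """items[i][p] is respondent p's answer to item i (sample variances)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(answers) for answers in zip(*items)]  # scale score per respondent
    item_variance = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # two items, four respondents
```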

In the current version, usernames within the application are anonymous at first; only when participants add each other as friends can they see each other's real names. Therefore, participants were asked whether they would prefer to provide their real names directly and to see the real names of other users. To ensure equal opportunities, anyone interested could register to use the application. However, only students in their first year of study in one of the target subjects (Economics, Business Education, and Applied Computer Science) could participate in the post-questionnaire. The application interaction phase started on 11/26/2020 and lasted about 2 ½ weeks. The post-questionnaire was available from 12/13/2020 to 12/23/2020. Participants received a compensation of 25 euros, and additional prizes of 25 to 75 euros were raffled, in order to reach enough participants in a very short time, as the project came about during the pandemic and participants had to be recruited at short notice. Additionally, ten of the 101 participants agreed to take part in a qualitative follow-up survey to substantiate the statements they made in the questionnaire described above. Sample statements are presented in the Results section to illustrate the quantitative results. Even though only the official interaction phase was relevant for the study evaluation, uniMatchUp! can still be used afterwards.

To answer RQ1, in a first step, the extent to which the variables and questionnaires included in the study were related to each other was examined (see Tab. 2).

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1. duration of use | 1 | .23* | .02 | -.05 | -.09 | .18 | .21* | .21* |
| 2. questions/answers | | 1 | .25* | .08 | -.09 | .04 | .16 | .18 |
| 3. study satisfaction | | | 1 | -.42** | -.60** | .10 | .02 | .01 |
| 4. grades | | | | 1 | .36** | -.09 | .02 | -.11 |
| 5. drop out intention | | | | | 1 | -.16 | -.08 | -.05 |
| 6. social connectedness | | | | | | 1 | .45** | .45* |
| 7. GA (self) | | | | | | | 1 | .64** |
| 8. GA (other) | | | | | | | | 1 |

Tab. 2: Bivariate Correlations between interaction variables and those related to academic success, social connectedness, and GA. * p < .050, ** p < .010; N = 101, apart from grades (N = 100). Note that lower scores can be considered positive for grades and intention to drop out.

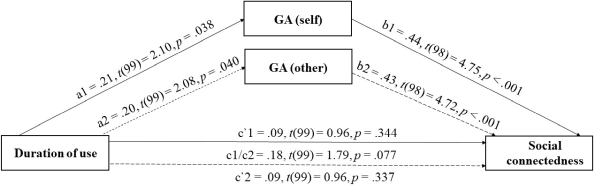

The results show that more active engagement with the application, in the form of questions and answers, was associated with slightly higher study satisfaction, supporting RQ1a. The estimated overall usage time was also slightly positively related to both GA dimensions, which in turn were moderately positively associated with feelings of social connectedness (see Tab. 2). Even though there was no direct correlation between estimated duration of use and social connectedness, it was examined exploratively whether an effect might be mediated by GA (see Schlusche et al., 2021). Therefore, in a second step, two mediation analyses were conducted with GA (self) and GA (other) as the respective mediator. Although both the a-path and the b-path were significant in both mediation analyses (see Fig. 4), the indirect effect was significant neither for the GA perspective of the self about other students (indirect effect ab = .09, 95% CI [-0.23, 0.22]) nor for the GA perspective of other participants about the self (indirect effect ab = .09, 95% CI [-0.19, 0.18]), as both confidence intervals include 0. Related to social connectedness, RQ1b is therefore only partially supported.
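The logic of such a mediation test can be illustrated with a minimal percentile-bootstrap sketch. The source does not state which software, model specification, or number of resamples was used, so everything below is an assumption: the a-path is taken as the OLS slope of the mediator M on X, the b-path as the coefficient of M in the regression of Y on X and M, and the indirect effect as their product ab. The confidence interval comes from the 2.5th and 97.5th percentiles of ab across resamples, and an indirect effect counts as significant only if this interval excludes 0. The function names and the synthetic data are hypothetical and are not taken from the uniMatchUp! study.

```python
import random
from statistics import mean


def slope(x, y):
    """a-path: OLS slope of y regressed on a single predictor x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def partial_slope(x, m, y):
    """b-path: OLS coefficient of m in y ~ x + m (m controlled for x)."""
    mx, mm, my = mean(x), mean(m), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((b - mm) ** 2 for b in m)
    sxm = sum((a - mx) * (b - mm) for a, b in zip(x, m))
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    smy = sum((b - mm) * (c - my) for b, c in zip(m, y))
    # Solve the 2x2 normal equations for the centered predictors x and m.
    det = sxx * smm - sxm ** 2
    return (sxx * smy - sxm * sxy) / det


def indirect_effect(x, m, y):
    """Indirect effect ab = a-path * b-path."""
    return slope(x, m) * partial_slope(x, m, y)


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=1):
    """Percentile bootstrap CI for ab, resampling participants with replacement."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            estimates.append(indirect_effect([x[i] for i in idx],
                                             [m[i] for i in idx],
                                             [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # skip degenerate resamples (e.g., a constant predictor)
    estimates.sort()
    lower = estimates[int(alpha / 2 * len(estimates))]
    upper = estimates[int((1 - alpha / 2) * len(estimates)) - 1]
    return lower, upper


if __name__ == "__main__":
    # Synthetic, hypothetical data with a built-in path X -> M -> Y.
    gen = random.Random(42)
    x = [gen.gauss(0, 1) for _ in range(101)]
    m = [0.5 * xi + gen.gauss(0, 1) for xi in x]
    y = [0.4 * mi + gen.gauss(0, 1) for mi in m]
    lo, hi = bootstrap_ci(x, m, y)
    print(f"ab = {indirect_effect(x, m, y):.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
    print("significant" if lo > 0 or hi < 0 else "not significant (CI includes 0)")
```

As in the reported analyses, significant a- and b-paths do not guarantee a significant indirect effect: the decision rests on whether the bootstrap interval for the product ab excludes 0.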

Concerning RQ2, a within-subject ANOVA with a Greenhouse-Geisser correction showed a significant difference in the perceived relevance of the GA information (F(3.25, 325.03) = 54.22, p < .001, η²p = .35). Bonferroni-adjusted post-hoc analyses revealed significant differences (p < .001) between all information labels except among the three cognitive GA labels (see Tab. 3). This results in an overall ranking of 1) cognitive GA (“[...] The quality shows how trustworthy the user appears.”), 2) behavioral GA (“[...] Based on participation, I see if a person is really willing to help others.”), and 3) emotional GA (“[...] Makes it possible to consciously improve the well-being of a person.”).
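Two details of the reported statistics can be made concrete in a short sketch. The Greenhouse-Geisser correction multiplies both degrees of freedom, (k − 1) and (k − 1)(N − 1), by an estimate ε of the departure from sphericity; with k = 5 GA labels and N = 101, the reported df1 = 3.25 therefore corresponds to ε ≈ 3.25 / 4 ≈ 0.81. Partial eta squared can be recovered from F and its degrees of freedom. The code assumes the standard Box/Greenhouse-Geisser estimator computed from the double-centered covariance matrix of the repeated measures; the source does not report ε itself or the software used, and the function names are hypothetical.

```python
def gg_epsilon(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of repeated measures.

    Uses the double-centered covariance matrix S*:
    epsilon = trace(S*)^2 / ((k - 1) * sum of squared elements of S*).
    Ranges from 1 / (k - 1) (maximal violation) to 1 (sphericity holds).
    """
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    col_means = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    grand = sum(row_means) / k
    dc = [[cov[i][j] - row_means[i] - col_means[j] + grand for j in range(k)]
          for i in range(k)]
    trace = sum(dc[i][i] for i in range(k))
    sum_sq = sum(v * v for row in dc for v in row)
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(epsilon, k, n):
    """Scale the uncorrected df (k - 1, (k - 1)(n - 1)) by epsilon."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)


def partial_eta_squared(f, df1, df2):
    """eta_p^2 = F * df1 / (F * df1 + df2).

    The GG correction does not change this value, because epsilon
    scales df1 and df2 by the same factor.
    """
    return f * df1 / (f * df1 + df2)


if __name__ == "__main__":
    # RQ2: five GA labels, N = 101; epsilon implied by the reported df1 = 3.25.
    print(corrected_df(3.25 / 4, k=5, n=101))                   # (3.25, 325.0)
    print(round(partial_eta_squared(54.22, 3.25, 325.03), 2))   # 0.35
```

Applying `partial_eta_squared` to the reported F and df reproduces the published effect size, which is a quick consistency check for values of this kind.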

| GA label | GA information type | M | SD |
|---|---|---|---|
| Average quality (contribution level) | Cognitive GA | 4.40 | 1.14 |
| Recommendations (contribution level) | Cognitive GA | 4.33 | 1.31 |
| Quality of contributions (personal level) | Cognitive GA | 4.28 | 1.06 |
| Participation (personal level) | Behavioral GA | 3.54 | 1.07 |
| Well-being (personal level) | Emotional GA | 2.63 | 1.25 |

Tab. 3: Descriptive statistics of perceived relevance of the GA information labels (scale 1 to 6), see Fig. 1 as reference

With respect to RQ3, a within-subjects ANOVA with a Greenhouse-Geisser correction revealed a significant difference in the likelihood of using the three main functions, namely public forum, private help-seeking, and learning group search (F(1.62, 162.06) = 14.13, p < .001, η²p = .12); see Tab. 4 (left side) for descriptive values.

| Application (function) | M | SD | UEQ subscale | M | SD |
|---|---|---|---|---|---|
| Whole application | 3.91 | 0.91 | Attractiveness | 1.28 | 1.00 |
| Public forum | 4.88 | 1.53 | Perspicuity | 1.04 | 1.24 |
| Learning group search | 4.55 | 1.22 | Dependability | 1.02 | 0.92 |
| Private help-seeking | 4.06 | 1.52 | Stimulation | 0.94 | 1.13 |
| | | | Efficiency | 0.86 | 1.06 |
| | | | Novelty | 0.71 | 1.11 |

Tab. 4: Descriptive statistics of likelihood of use (scale 1 to 5) and user experience (scale -3 to +3)

Bonferroni-adjusted post-hoc analyses revealed significant differences (p < .050) between all three functions, with generally very high mean scores. Overall, the whole application was rated very well, with a mean score close to 4. Participants praised that “[…] interaction with other fellow students was super and, above all, better structured than in comparable communication services”. The public forum was the most popular function, because “[…] by allowing everyone to potentially be involved, you always get a quick response”. The learning group search was the second most popular feature. Here it was stated that “[…] it helps those people who currently have no other possibility to find a learning group”, which especially concerns students who do not live in the university town. Even though all main functions were rated very positively, the private help-seeking function was ranked lowest; it was nevertheless appreciated “[…] due to the possibility of finding a concrete personal caregiver”. Regarding the UEQ (see Tab. 4, right side), all subscales except novelty achieved a positive evaluation (M > 0.8), while novelty (M = 0.71) fell within the neutral range.
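The UEQ interpretation rule from the Method section can be applied mechanically to the subscale means in Tab. 4. The following minimal sketch assumes the thresholds given there (neutral between -0.8 and 0.8, positive above 0.8); the negative band below -0.8 is the symmetric counterpart used in the UEQ convention and is not stated in this paper. The function and variable names are hypothetical.

```python
# Subscale means from Tab. 4 (right side); scale -3 to +3.
UEQ_MEANS = {
    "Attractiveness": 1.28,
    "Perspicuity": 1.04,
    "Dependability": 1.02,
    "Stimulation": 0.94,
    "Efficiency": 0.86,
    "Novelty": 0.71,
}


def ueq_category(mean_score, threshold=0.8):
    """Map a UEQ subscale mean to an evaluation band.

    > threshold: positive; < -threshold: negative (assumed symmetric band);
    otherwise (including exactly +/-threshold): neutral.
    """
    if mean_score > threshold:
        return "positive"
    if mean_score < -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for scale, m in UEQ_MEANS.items():
        print(f"{scale:15s} {m:5.2f} {ueq_category(m)}")
```

Only novelty falls into the neutral band, which matches the summary of the UEQ results above.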

Regarding the preference for public usernames, 37 participants answered “Yes” and 64 participants answered “No”. Supporters of public names argued that public names create a more personal atmosphere (“One feels directly closer, anonymous names promote distance.”) and ensure the quality of communication (“Through anonymous communication, users are able to spread nonsense.”). Participants who were against the direct use of public names argued that anonymous names prevent learners from developing prejudices based on a person's name (“In my opinion, you are not that biased if you don't know the name.”). They also stated that anonymity makes it easier for shy learners to ask questions, as there might be less social anxiety (“Anonymity gives me more freedom to ask questions that may be viewed critically but cannot be directly linked to me.”).

The qualitative questions revealed several areas for improvement. The biggest shortcoming of the application is its current loading time (Speed Index in Google PageSpeed Insights ≈ 1.5 s for the desktop version and ≈ 6.0 s for the mobile version), which was described as “[...] the most serious thing”. Moreover, clarity was criticized, since “[...] the application contains many empty spaces and sometimes bulky information”. The mobile view in particular seems to need improvement, “[...] as many elements are too small and unclear”. Participants also wished to be able to “[...] favor or save posts so that you don’t always have to search for them” and “[...] to mark posts that have not yet been read or to receive notifications about new posted contributions to not lose track of them”; neither feature is implemented in the current version of the application.

The goal of this project was to develop an application based on GA information that facilitates students’ entry into academic life, especially in the context of COVID-19. uniMatchUp! was examined with respect to academic success as well as social connectedness (RQ1). Additionally, the relevance of different GA information (RQ2) and the acceptance of the application design (RQ3) were examined. Even though only a few relationships of interaction intensity and GA with the relevant dependent variables could be identified, there were positive tendencies regarding study satisfaction as well as social connectedness. This is in line with previous research (Algharaibeh, 2020; Schlusche et al., 2021; Wilcox et al., 2005) and highlights the potential of using GA tools during studies. In addition, it must be noted that in the first semester, many factors might have prevented more positive study outcomes; the authors could not control for all of these confounding variables, such as a generally wrong choice of study program, the increasing difficulty of the topics, or further support offers of the university. The results indicate that cognitive GA information was most relevant for the choice of a learning partner, while emotional GA information seemed less appropriate, at least in the form the authors implemented it. In general, both the individual functions and the entire application, as well as its user experience, were evaluated very positively. However, there are also weaknesses and needs for improvement, which lead to the following implications: 1) The emotional GA component (well-being) should be removed or reconceptualized at the individual personal level, because it was ranked lowest and an honest assessment cannot be ensured. Alternatively, an overarching assessment of togetherness at the sub-forum or overall network level would be conceivable, but more research is needed to find adequate emotional GA support. 2) Caching should be refined, as page load times are too high. 3) The mobile layout should be improved, because the current display is not optimal; a native application could be considered. 4) Notification functions, such as email or push notifications, should be implemented. 5) The basic implementation of all three core functionalities needs to be developed further.

One limitation of the study is that a delay of about two weeks during the programming process meant that the study started later in the semester than intended. Consequently, some students may have already connected via other platforms before using uniMatchUp!. Also, the study did not consider possible negative sides of GA tool use. For instance, assessing fellow students may aggravate the exclusion of outsiders and of students who fall behind, because their displayed GA information may make them appear less attractive.

Currently, the relevance of a group recommendation in the learning group search is calculated by applying an individual similarity measure to each attribute and then deriving an average relevance value. A possible improvement is to combine and standardize the calculation across all attributes, for which the cosine measure would be suitable. This type of calculation is used, e.g., to calculate similarities between documents (Hoppe, 2020). In this case, one would create a vector for each group and for each search query that contains the respective attribute values. By forming the scalar product of the group vector and the query vector and normalizing it by the product of both vector lengths, a uniform similarity measure could be calculated (Hoppe, 2020). In addition, the current time slots are only roughly defined and do not allow precise times to be derived. To better meet students' needs, it would make sense to use the time slots that are also used for university events. This would allow students to specify the time slots in which they have no events. Another possible improvement is the option to indicate whether a learning group prefers to meet online or on site. The functionality could be extended with a specification of the learning location, which could be the university or any city; this is especially relevant for commuters. In general, the list of preferences could be extended. The Index of Learning Styles questionnaire, which contains 44 questions, has proven to be a useful tool for group development (Felder & Soloman, 1991). Further attributes besides the quality of the ratings are summarized by Maqtary, Mohsen, and Bechkoum (2019). These include learning styles, personality traits, team roles, and social interaction patterns (various social skills such as participation).
Further, such approaches might be enriched by using semantic technologies on the content level, analyzing learners’ projections or artifacts, either to support grouping based on extracted knowledge diversity or to automate the extraction of cognitive GA information (Manske, 2020; Schulten, Manske, Langner-Thiele, & Hoppe, 2020). To better structure conversations within a group, it might also help to actively integrate the results of the group algorithm and cognitive GA information into the learning scenario (see Erkens, Manske, Hoppe, & Bodemer, 2019).
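The cosine measure proposed for the learning group search can be sketched in a few lines. This is a minimal illustration, not the uniMatchUp! implementation: the attribute names, the binary encoding of learning-style preferences, time slots, and the online option, and the helper functions are all hypothetical assumptions.

```python
import math

# Hypothetical attribute encoding: one dimension per attribute value, e.g.
# learning-style preferences, one indicator per university time slot, online.
ATTRIBUTES = ["visual", "verbal", "active", "reflective",
              "slot_mon_08", "slot_mon_10", "slot_tue_08", "online"]


def encode(profile):
    """Turn a dict of attribute values into a vector in ATTRIBUTES order (missing -> 0)."""
    return [float(profile.get(name, 0)) for name in ATTRIBUTES]


def cosine_similarity(u, v):
    """Dot product of u and v divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an empty profile matches nothing
    return dot / (norm_u * norm_v)


def rank_groups(query, groups):
    """Return (group name, similarity) pairs, best match first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in groups.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    query = encode({"visual": 1, "active": 1, "slot_mon_08": 1,
                    "slot_mon_10": 1, "online": 1})
    groups = {
        "Group A": encode({"visual": 1, "active": 1, "slot_mon_08": 1, "online": 1}),
        "Group B": encode({"verbal": 1, "reflective": 1, "slot_tue_08": 1}),
    }
    for name, score in rank_groups(query, groups):
        print(f"{name}: {score:.2f}")  # Group A: 0.89, then Group B: 0.00
```

One design caveat: if non-binary attributes with different ranges are added to the vectors, they should be normalized first; otherwise attributes with large numeric ranges dominate the single similarity value.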

In contrast to the recommendation of existing groups as implemented in the present application, existing research also describes further approaches to group formation. Here, the idea is to automatically form an optimally matching heterogeneous, homogeneous, or mixed group from a set of individuals based on various attributes (Maqtary et al., 2019). The difficulty of group formation in computer-supported collaborative learning is that groups must be formed based on different characteristics of the students. These characteristics, in turn, can come in various forms (homogeneous and heterogeneous), such as grades, self-evaluation, or gender. To evaluate such criteria jointly, the data must be normalized so that they can be compared effectively. GroupAL is an algorithmic approach to group formation and quality assessment that allows the inclusion of multidimensional criteria that are expressed homogeneously or heterogeneously. Because it uses a normalized metric, different group formations can be compared (Konert et al., 2014). In addition, approaches based on genetic algorithms exist for grouping students with multiple attributes; they attempt to ensure that the group partitioning is fair and balanced. Genetic algorithms have already been successfully applied to optimize the automatic formation of mixed groups (including heterogeneous and homogeneous attributes) based on the student characteristics considered (Chen & Kuo, 2019). However, since in the university context learning groups can usually be formed and changed according to the students' needs and are not fixed once and for all, these approaches may be too rigid for the use case described. Compared to the group recommendation approach implemented by the authors, they offer students little freedom.
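The role of normalization in comparing group formations can be illustrated with a deliberately simplified sketch. It is not the GroupAL metric (Konert et al., 2014), whose definition is not given here; it is a hypothetical scoring scheme in which each criterion is min-max scaled to [0, 1] across all students, and a group is rewarded for a small within-group range on homogeneous criteria and a large one on heterogeneous criteria. The student records, criterion names, and scoring rule are assumptions for illustration only.

```python
def min_max_normalize(students, criterion):
    """Scale one criterion to [0, 1] across all students (constant criterion -> 0.5)."""
    values = [s[criterion] for s in students]
    lo, hi = min(values), max(values)
    return {s["name"]: (s[criterion] - lo) / (hi - lo) if hi > lo else 0.5
            for s in students}


def group_score(group, students, homogeneous, heterogeneous):
    """Average per-criterion score in [0, 1]; higher means a better-matching group.

    Homogeneous criteria reward a small within-group range,
    heterogeneous criteria reward a large one.
    """
    scores = []
    for criterion in homogeneous + heterogeneous:
        norm = min_max_normalize(students, criterion)
        vals = [norm[name] for name in group]
        spread = max(vals) - min(vals)
        scores.append(1 - spread if criterion in homogeneous else spread)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical students: grade (German scale, lower is better), weekly study hours.
    students = [
        {"name": "A", "grade": 1.3, "hours": 10},
        {"name": "B", "grade": 3.7, "hours": 10},
        {"name": "C", "grade": 2.0, "hours": 2},
        {"name": "D", "grade": 3.3, "hours": 3},
    ]
    for group in (["A", "B"], ["A", "C"]):
        score = group_score(group, students, homogeneous=["hours"], heterogeneous=["grade"])
        print("+".join(group), f"{score:.2f}")  # A+B 1.00, A+C 0.15
```

Without the normalization step, the grade and hours criteria would contribute on incompatible scales, and the two groups could not be compared on a single score.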

With regard to the private help-seeking function, asking a question currently incurs a very high computational cost: for each request, all students of a course are filtered, all GA information is calculated to split them into different lists, these lists are sorted, and different students are then selected from different parts of them. Computational effort, and thus calculation time, could be saved by, e.g., creating such lists once a day for each course, so that only a random selection from the various sub-lists is needed per request, i.e., by creating a daily "cache". Moreover, some students may not want to be suggested as potential helpers, due to social or content-related uncertainty. For this reason, a function could be introduced that allows students to decide for themselves whether they want to be displayed in the private help-seeking function for a particular subject. For future use, it would make sense to calculate the average quality not as a simple mean but as a mean weighted by the recency of the posts (the more recent, the higher the weight).
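The three proposals for private help-seeking (a daily cache of helper sub-lists, an opt-out from being suggested, and a recency-weighted quality score) can be combined in one short sketch. Everything here is hypothetical and not the uniMatchUp! implementation: the data layout (dicts with `name`, `ratings`, and a `show_as_helper` flag), the split into three tiers, and the exponential decay with a 14-day half-life are illustrative assumptions.

```python
import datetime as dt
import random

HALF_LIFE_DAYS = 14.0  # hypothetical: a rating loses half its weight every 14 days


def weighted_quality(ratings, today):
    """Recency-weighted mean of (date, rating) pairs; newer posts weigh more."""
    num = den = 0.0
    for posted, rating in ratings:
        weight = 0.5 ** ((today - posted).days / HALF_LIFE_DAYS)
        num += weight * rating
        den += weight
    return num / den if den else 0.0


_daily_cache = {}  # (course_id, date) -> list of helper tiers


def helper_tiers(course_id, students, today, n_tiers=3):
    """Build, at most once per course and day, tiers of visible helpers
    sorted by weighted quality (best first). Later calls on the same day
    reuse the cached tiers and ignore the `students` argument."""
    key = (course_id, today)
    if key not in _daily_cache:
        # Hypothetical opt-out flag: students are suggested unless they set it to False.
        eligible = [s for s in students if s.get("show_as_helper", True)]
        ranked = sorted(eligible,
                        key=lambda s: weighted_quality(s["ratings"], today),
                        reverse=True)
        size = max(1, -(-len(ranked) // n_tiers))  # ceiling division
        _daily_cache[key] = [ranked[i:i + size] for i in range(0, len(ranked), size)]
    return _daily_cache[key]


def suggest_helpers(course_id, students, today, rng=random):
    """Per request, only a cheap random pick from each cached tier is needed."""
    return [rng.choice(tier)["name"] for tier in helper_tiers(course_id, students, today)]


if __name__ == "__main__":
    today = dt.date(2020, 12, 10)
    students = [
        {"name": "S1", "ratings": [(dt.date(2020, 12, 9), 5), (dt.date(2020, 11, 1), 2)]},
        {"name": "S2", "ratings": [(dt.date(2020, 12, 1), 4)]},
        {"name": "S3", "ratings": [(dt.date(2020, 11, 28), 3)]},
        {"name": "S4", "ratings": [(dt.date(2020, 12, 8), 5)], "show_as_helper": False},
    ]
    print(suggest_helpers("econ-101", students, today))
```

The expensive filtering and sorting then runs once per course and day instead of once per question; a production version would additionally need to evict entries from previous days.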

It should be noted at this point that the sample was limited to 101 participants, that the study was conducted in a very short time span, and that it included only three study programs. Also, there was no control condition, so it cannot be determined whether the results would have been different without GA features. It is therefore planned to further develop and evaluate uniMatchUp! based on these results and to expand its use. Further field and experimental studies are planned to gain deeper insights into the effects of single and combined GA information, with a focus on emotional GA support and the effects of user anonymity vs. recognition. Such efforts are promising, as the study results indicate that university students can benefit from GA information in the university context. Furthermore, the findings show that such an application and its main functions are accepted and desired.

This project was funded by the Federal Ministry of Education and Research under the funding code 16DHBQP036. The full code can be accessed here: https://git.uni-due.de/psychmeth/unimatchupapp . We thank Christian Schlusche, Ádám Szabó, Kirsten Wullenkord, Laura aus der Fünten, Laurent Demay, Yvonne Gehbauer, Hannah Krajewski, Simon Krukowski, Malte Kummetz, Franziska Lambertz, Hans-Luis Magin, Joshua Norden, and Cora Weisenberger for collaborating in the project.

Algharaibeh, S. A. S.: Should I ask for help? The role of motivation and help-seeking in students’ academic achievement: A path analysis model. In: Cypriot Journal of Educational Sciences, 15, 5, 2020, pp. 1128–1145. https://doi.org/10.18844/cjes.v15i5.5193 (last check 2022-08-11)

Atik, G.; Yalçin, Y.: Help-seeking attitudes of university students: The role of personality traits and demographic factors. In: South African Journal of Psychology, 41, 3, 2011, pp. 328–338. https://doi.org/10.1177/008124631104100307 (last check 2022-08-11)

Bodemer, D.; Dehler, J.: Group awareness in CSCL environments. In: Computers in Human Behavior, 27, 3, 2011, pp. 1043–1045. https://doi.org/10.1016/j.chb.2010.07.014 (last check 2022-08-11)

Bodemer, D.; Janssen, J.; Schnaubert, L.: Group awareness tools for computer-supported collaborative learning. In: Fischer, F.; Hmelo-Silver, C. E.; Goldman, S. R.; Reimann, P. (Eds.): International Handbook of the Learning Sciences, pp. 351–358. Routledge/Taylor & Francis, New York, NY, 2018.

Bonebright, D. A.: 40 years of storming: A historical review of Tuckman’s model of small group development. In: Human Resource Development International, 13, 1, 2010, pp. 111–120. https://doi.org/10.1080/13678861003589099 (last check 2022-08-11)

Chen, C. M.; Kuo, C. H.: An optimized group formation scheme to promote collaborative problem-based learning. In: Computers & Education, 133, 2019, pp. 94–115. https://doi.org/10.1016/j.compedu.2019.01.011 (last check 2022-08-11)

Christensen, I. A.; Schiaffino, S.: Entertainment recommender systems for group of users. In: Expert Systems with Applications, 38, 11, 2011, pp. 14127–14135. https://doi.org/10.1016/j.eswa.2011.04.221 (last check 2022-08-11)

Denny, P.; Luxton-Reilly, A.; Hamer, J.: The PeerWise system of student contributed assessment questions. In: Hamilton, S.; Hamilton, M. (Eds.): Proceedings of the 10th Conference on Australasian Computing Education. Wollongong: Australian Computer Society, Vol. 78, 2008, pp. 69–74. https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.296.754&rep=rep1&type=pdf (last check 2022-08-11)

Dong, Z.; Li, C.; Untch, R. H.: Build peer support network for CS2 students. In: Clincy, V. A.; Harbort, B. (Eds.): Proceedings of the 49th Annual Southeast Regional Conference. Kennesaw, GA: Association for Computing Machinery, 2011, pp. 42–47. https://doi.org/10.1145/2016039.2016058 (last check 2022-08-11)

Eligio, U. X.; Ainsworth, S. E.; Crook, C. K.: Emotion understanding and performance during computer-supported collaboration. In: Computers in Human Behavior, 28, 6, 2012, pp. 2046–2054. https://doi.org/10.1016/j.chb.2012.06.001 (last check 2022-08-11)

Erkens, M.; Manske, S.; Hoppe, H. U.; Bodemer, D.: Awareness of complementary knowledge in CSCL: Impact on learners’ knowledge exchange in small groups. In: Nakanishi, H.; Egi, H.; Chounta, I.-A.; Takada, H.; Ichimura, S.; Hoppe, U. (Eds.): Lecture Notes in Computer Science, Vol. 11677, Collaboration Technologies and Social Computing, 2019, pp. 3–16. https://doi.org/10.1007/978-3-030-28011-6_1 (last check 2022-08-11)

Felder, R. M.; Soloman, B. A.: Index of Learning Styles. North Carolina State University, 1991. https://www.webtools.ncsu.edu/learningstyles/ (last check 2022-08-11)

Fellenberg, F.; Hannover, B.: Kaum begonnen, schon zerronnen? Psychologische Ursachenfaktoren für die Neigung von Studienanfängern, das Studium abzubrechen oder das Fach zu wechseln [Barely started, already lost? Psychological causal factors for the tendency of first-year students to drop out of their studies or change subjects]. In: Empirische Pädagogik, 20, 4, 2006, pp. 381–399. Retrieved from https://www.fachportal-paedagogik.de/literatur/vollanzeige.html?FId=777445 (not available at 2022-08-11)

Gruenfeld, D. H.; Mannix, E. A.; Williams, K. Y.; Neale, M. A.: Group composition and decision making: How member familiarity and information distribution affect process and performance. In: Organizational Behavior and Human Decision Processes, 67, 1, 1996, pp. 1–15. https://doi.org/10.1006/obhd.1996.0061 (last check 2022-08-11)

Hoppe, T.: Grundlagen des Information Retrievals. In: Semantische Suche, pp. 63–107. Springer, Wiesbaden, 2020.

Johnson, D. W.; Johnson, F. P.: Joining together: Group theory and group skills (4th ed.). Prentice Hall, Englewood Cliffs, NJ, 1991.

Kimmerle, J.; Cress, U.: Group awareness and self-presentation in computer-supported information exchange. In: International Journal of Computer-Supported Collaborative Learning, 3, 1, 2008, pp. 85–97. https://doi.org/10.1007/s11412-007-9027-z (last check 2022-08-11)

Konert, J.; Burlak, D.; Steinmetz, R.: The group formation problem: An algorithmic approach to learning group formation. In: Proceedings of the 9th European Conference on Technology Enhanced Learning (EC-TEL), pp. 221–234. Springer, New York, 2014. https://doi.org/10.1007/978-3-319-11200-8_17 (last check 2022-08-11)

Konert, J.; Richter, K.; Mehm, F.; Göbel, S.; Bruder, R.; Steinmetz, R.: PEDALE–A peer education diagnostic and learning environment. In: Journal of Educational Technology & Society, 15, 4, 2012, pp. 27–38. https://www.jstor.org/stable/10.2307/jeductechsoci.15.4.27 (last check 2022-08-11)

Laugwitz, B.; Held, T.; Schrepp, M.: Construction and evaluation of a user experience questionnaire. In: Holzinger, A. (Ed.): HCI and Usability for Education and Work. Vol. 5298, pp. 63–76. Springer, Berlin, Heidelberg, 2008. https://link.springer.com/book/10.1007/978-3-540-89350-9 (last check 2022-08-11)

Liu, Q.; Sun, X.: Research of web real-time communication based on web socket. In: International Journal of Communications, Network and System Sciences, 5, 12, 2012, pp. 797–801. http://dx.doi.org/10.4236/ijcns.2012.512083 (last check 2022-08-11)

Manske, S.: Managing Knowledge Diversity in Computer-Supported Inquiry-Based Science Education. 2020. (Doctoral dissertation). https://duepublico2.uni-due.de/receive/duepublico_mods_00071585 (last check 2022-08-11)

Manske, S.; Hecking, T.; Hoppe, H. U.; Chounta, I.-A.; Werneburg, S.: Using differences to make a difference: A study on heterogeneity of learning groups. In: Lindwall, O.; Häkkinen, P.; Koschmann, T.; Tchounikine, P.; Ludvigsen, S. (Eds.): Exploring the Material Conditions of Learning: The Computer Supported Collaborative Learning (CSCL) Conference 2015. Vol. 1, pp. 182–189. The International Society of the Learning Sciences, Gothenburg, 2015. https://stelar.edc.org/opportunities/cscl-2015-exploring-material-conditions-learning (last check 2022-08-11)

Maqtary, N.; Mohsen, A.; Bechkoum, K.: Group formation techniques in computer-supported collaborative learning: A systematic literature review. In: Technology, Knowledge and Learning, 24, 2, 2019, pp. 169–190. https://doi.org/10.1007/s10758-017-9332-1 (last check 2022-08-11)

Mock, A.: Open (ed) classroom–who cares? In: MedienPädagogik: Zeitschrift für Theorie und Praxis der Medienbildung, 28, 2017, pp. 57–65. https://doi.org/10.21240/mpaed/28/2017.02.26.X (last check 2022-08-11)

Ollesch, L.; Heimbuch, S.; Bodemer, D.: Towards an integrated framework of group awareness support for collaborative learning in social media. In: Chang, M.; So, H.-J.; Wong, L.-H.; Yu, F.-Y.; Shih, J. L. (Eds.): Proceedings of the 27th International Conference on Computers in Education, pp. 121–130. Taiwan: Asia-Pacific Society for Computers in Education, 2019. Corrigendum in DuEPublico2: https://doi.org/10.17185/duepublico/74884 (last check 2022-08-11)

Ollesch, L.; Heimbuch, S.; Krajewski, H.; Weisenberger, C.; Bodemer, D.: How students weight different types of group awareness attributes in wiki articles: A mixed-methods approach. In: Gresalfi, M.; Horn, I. S. (Eds.): The Interdisciplinarity of the Learning Sciences, 14th International Conference of the Learning Sciences (ICLS) 2020, Vol. 2, pp. 1157–1164. International Society of the Learning Sciences, Nashville, Tennessee, 2020. https://repository.isls.org/handle/1/6309 (last check 2022-08-17)

Ollesch, L.; Venohr, O.; Bodemer, D.: Implicit guidance in educational online collaboration: supporting highly qualitative and friendly knowledge exchange processes. In: Computers and Education Open, 3, 2022, Article 100064. https://doi.org/10.1016/j.caeo.2021.100064 (last check 2022-08-11)

Schlusche, C.; Schnaubert, L.; Bodemer, D.: Group awareness information to support academic help-seeking. In: Chang, M.; So, H.-J.; Wong, L.-H.; Yu, F.-Y.; Shih, J. L. (Eds.): Proceedings of the 27th International Conference on Computers in Education, pp. 131–140. Taiwan: Asia-Pacific Society for Computers in Education, 2019. http://ilt.nutn.edu.tw/icce2019/04_Proceedings.html (last check 2022-08-11)

Schlusche, C.; Schnaubert, L.; Bodemer, D.: Perceived social resources affect help-seeking and academic outcomes in the initial phase of undergraduate studies. In: Frontiers in Education, 6, 2021, Article 732587. https://doi.org/10.3389/feduc.2021.732587 (last check 2022-08-11)

Schulten, C.; Manske, S.; Langner-Thiele, A.; Hoppe, H. U.: Digital value-adding chains in vocational education: Automatic keyword extraction from learning videos to provide learning resource recommendations. In: Alario-Hoyos, C.; Rodríguez-Triana, M.J.; Scheffel, M.; Arnedillo-Sánchez, I.; Dennerlein, S.M. (Eds): Addressing Global Challenges and Quality Education. EC-TEL 2020, pp. 15-29. Lecture Notes in Computer Science, Vol 12315. Springer, Cham, 2020. https://doi.org/10.1007/978-3-030-57717-9_2 (last check 2022-08-11)

Tuckman, B. W.; Jensen, M. A. C.: Stages of small-group development revisited. In: Group & Organization Studies, 2, 4, 1977, pp. 419–427. https://doi.org/10.1177/105960117700200404 (last check 2022-08-11)

Van Bel, D. T.; Smolders, K. C.; IJsselsteijn, W. A.; De Kort, Y. A. W.: Social connectedness: Concept and measurement. In: Callaghan, V.; Kameas, A.; Reyes, A.; Royo, D.; Weber, M. (Eds.): Proceedings of the 5th International Conference on Intelligent Environments, Vol. 2, 2009, pp. 67–74. IOS Press, Amsterdam, 2009. https://doi.org/10.3233/978-1-60750-034-6-67 (last check 2022-08-11)

Westermann, R.; Heise, E.; Spies, K.: FB-SZ-K-Kurzfragebogen zur Erfassung der Studienzufriedenheit [FB-SZ-K Short Questionnaire for the Assessment of Study Satisfaction]. In: Leibniz-Institut für Psychologie (ZPID) (Ed.): Open Test Archive. ZPID, Trier, 2018. https://doi.org/10.23668/psycharchives.4654 (last check 2022-08-11)

Wilcox, P.; Winn, S.; Fyvie‐Gauld, M.: ‘It was nothing to do with the university, it was just the persons’: The role of social support in the first‐year experience of higher education. In: Studies in Higher Education, 30, 6, 2005, pp. 707–722. https://doi.org/10.1080/03075070500340036 (last check 2022-08-11)

Zheng, Z.; Pinkwart, N.: A discrete particle swarm optimization approach to compose heterogeneous learning groups. In: Sampson, D. G.; Spector, J. M.; Chen, N.-S.; Kinshuk, R. H. (Eds.): Proceedings of the 14th International Conference on Advanced Learning Technologies, 2014, pp. 49–51. IEEE, Athens, Greece, 2014. https://doi.org/10.1109/ICALT.2014.24 (last check 2022-08-11)